Real-Time Data Threat Detection: How Organizations Protect Sensitive Data

Real-time data threat detection is the continuous monitoring of data access, movement, and behavior to identify and stop security threats as they occur. In 2026, this capability is essential as sensitive data flows across hybrid cloud environments, AI pipelines, and complex multi-platform architectures.

As organizations adopt AI technologies at scale, real-time data threat detection has evolved from a reactive security measure into a proactive, intelligence-driven discipline. Modern systems continuously monitor data movement and access patterns to identify emerging vulnerabilities before sensitive information is compromised, helping organizations maintain security posture, ensure compliance, and safeguard business continuity.

These systems leverage artificial intelligence, behavioral analytics, and continuous monitoring to establish baselines of normal behavior across vast data estates. Rather than relying solely on known attack signatures, they detect subtle anomalies that signal emerging risks, including unauthorized data exfiltration and shadow AI usage.

How Real-Time Data Threat Detection Software Works

Real-time data threat detection software operates by continuously analyzing activity across cloud platforms, endpoints, networks, and data repositories to identify high-risk behavior as it happens. Rather than relying on static rules alone, these systems correlate signals from multiple sources to build a unified view of data activity across the environment.

A key capability of modern detection platforms is behavioral modeling at scale. By establishing baselines for users, applications, and systems, the software can identify deviations such as unexpected access patterns, irregular data transfers, or activity from unusual locations. These anomalies are evaluated in real time using artificial intelligence, machine learning, and predefined policies to determine potential security risk.
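To make the idea of a behavioral baseline concrete, here is a minimal sketch that flags a value when it sits too many standard deviations away from a user's recent history. The function name `is_anomalous`, the sample figures, and the threshold of 3.0 are illustrative assumptions, not values taken from any particular product. Production systems typically combine many such signals with learned models.

```python
from statistics import mean, stdev

def is_anomalous(history, observed, threshold=3.0):
    """Return True when `observed` is more than `threshold` standard
    deviations away from the mean of `history` (a simple z-score test)."""
    if len(history) < 2:
        return False  # too little data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return observed != mu
    return abs(observed - mu) / sigma > threshold

# Hypothetical baseline: megabytes downloaded per day by one user.
daily_mb_downloaded = [120, 135, 110, 128, 140, 125, 131]

print(is_anomalous(daily_mb_downloaded, 133))   # False: within the normal range
print(is_anomalous(daily_mb_downloaded, 2400))  # True: far outside the baseline
```

A z-score is only one possible baseline model. It is shown here because it is easy to reason about, not because it is what any specific platform uses.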

What differentiates modern real-time data threat detection software is its ability to operate at petabyte scale without requiring sensitive data to be moved or duplicated. In-place scanning preserves performance and privacy while enabling comprehensive visibility. Automated response mechanisms allow security teams to contain threats quickly, reducing the likelihood of data exposure, downtime, and regulatory impact.

AI-Driven Threat Detection Systems

AI-driven threat detection systems enhance real-time data security by identifying complex, multi-stage attack patterns that traditional rule-based approaches cannot detect. Rather than evaluating isolated events, these systems analyze relationships across user behavior, data access, system activity, and contextual signals to surface high-risk scenarios in real time.

By applying machine learning, deep learning, and natural language processing, AI-driven systems can detect subtle deviations that emerge across multiple data points, even when individual signals appear benign. This allows organizations to uncover sophisticated threats such as insider misuse, advanced persistent threats, lateral movement, and novel exploit techniques earlier in the attack lifecycle.
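The point that individually benign signals can add up to a meaningful risk can be illustrated with a small sketch. It assumes each event already carries a per-signal score between 0 and 1, and it simply sums scores per user. The event fields, the scores, and the threshold of 1.0 are hypothetical, and real systems would use far richer models than a sum.

```python
from collections import defaultdict

def correlate(events, alert_threshold=1.0):
    """Sum weak signal scores per user and return only the users whose
    combined score reaches the alert threshold."""
    totals = defaultdict(float)
    for event in events:
        totals[event["user"]] += event["score"]
    return {
        user: round(total, 2)
        for user, total in totals.items()
        if total >= alert_threshold
    }

# Hypothetical events: none of these would trigger an alert on its own.
events = [
    {"user": "alice", "signal": "off_hours_login", "score": 0.4},
    {"user": "alice", "signal": "new_location", "score": 0.3},
    {"user": "alice", "signal": "bulk_download", "score": 0.5},
    {"user": "bob", "signal": "new_location", "score": 0.3},
]

print(correlate(events))  # {'alice': 1.2}
```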

Once a potential threat is identified, automated prioritization and response mechanisms accelerate remediation. Actions such as isolating affected resources, restricting access, or alerting security teams can be triggered immediately, significantly reducing detection-to-response time compared to traditional security models.

Over time, AI-driven systems continuously refine their detection models using new behavioral data and outcomes. This adaptive learning reduces false positives, improves accuracy, and enables a scalable security posture capable of responding to evolving threats in dynamic cloud and AI-driven environments.

Tracking Data Movement and Data Lineage

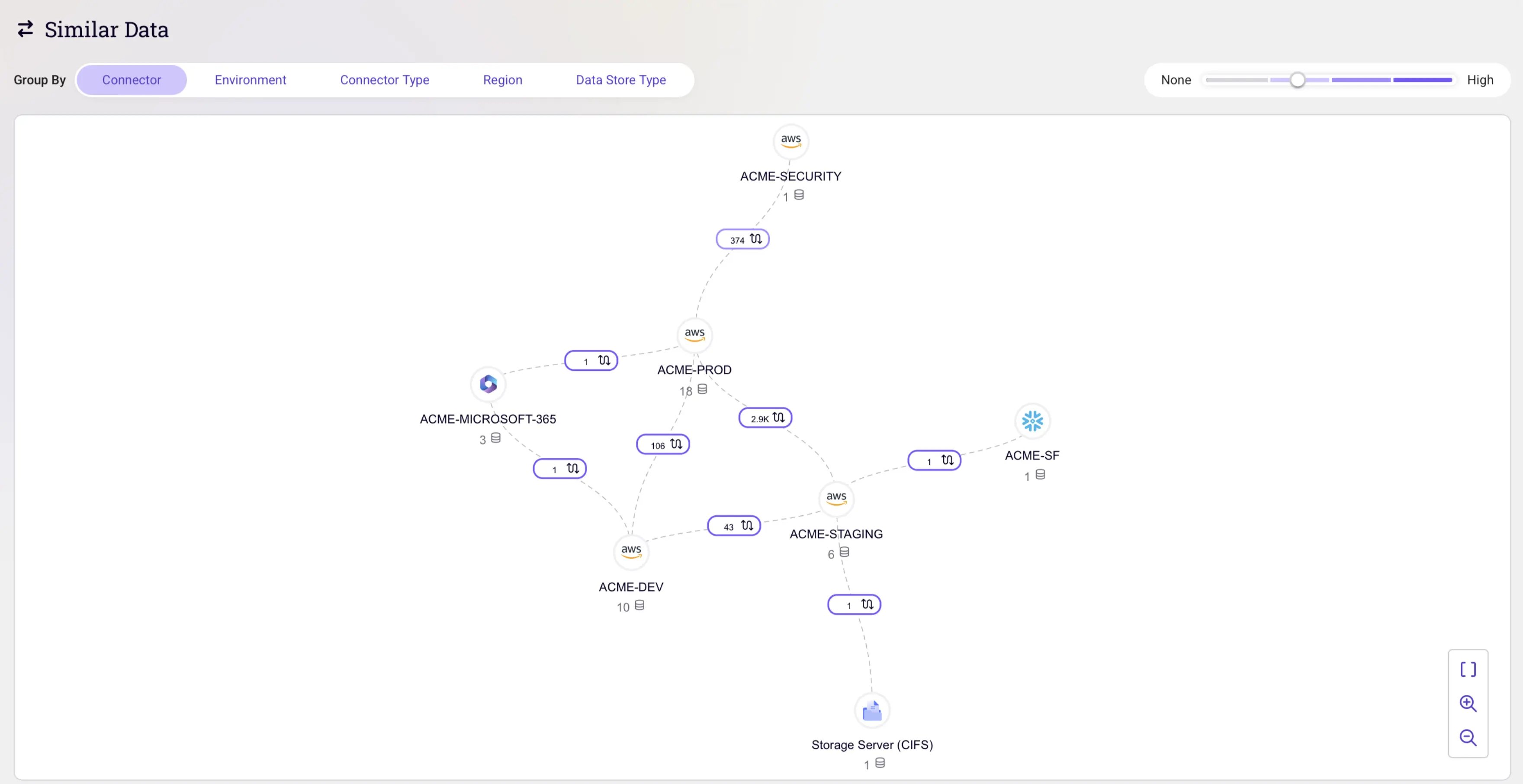

Beyond identifying where sensitive data resides at a single point in time, modern data security platforms track data movement across its entire lifecycle. This visibility is critical for detecting when sensitive data flows between regions, across environments (such as from production to development), or into AI pipelines where it may be exposed to unauthorized processing.

By maintaining continuous data lineage and audit trails, these platforms monitor activity across cloud data stores, including ETL processes, database migrations, backups, and data transformations. Rather than relying on static snapshots, lineage tracking reveals dynamic data flows, showing how sensitive information is accessed, transformed, and relocated across the enterprise in real time.
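One way to picture lineage tracking is as a directed graph in which each edge records that data was copied or transformed from one store into another. The sketch below walks such a graph to find every downstream location of a sensitive source and then filters for destinations in environments where that data should not land. The store names, the `LINEAGE` mapping, and the restricted prefixes are all made up for illustration.

```python
from collections import deque

# Hypothetical lineage edges: each key feeds data into the listed targets.
LINEAGE = {
    "prod.customers": ["etl.customers_clean", "backup.customers_2026"],
    "etl.customers_clean": ["dev.customers_sample", "ml.training_set"],
    "ml.training_set": [],
    "dev.customers_sample": [],
    "backup.customers_2026": [],
}

def downstream(source, lineage):
    """Breadth-first traversal returning every store reachable from `source`."""
    seen = set()
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for target in lineage.get(node, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen

def risky_destinations(source, lineage, restricted_prefixes=("dev.", "ml.")):
    """Downstream stores whose name marks them as a restricted environment."""
    return sorted(
        store for store in downstream(source, lineage)
        if store.startswith(restricted_prefixes)
    )

print(risky_destinations("prod.customers", LINEAGE))
# ['dev.customers_sample', 'ml.training_set']
```

The `seen` set also keeps the traversal from looping forever if the lineage graph contains a cycle.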

In the AI era, tracking data movement is especially important as data is frequently duplicated and reused to train or power machine learning models. These capabilities allow organizations to detect when sensitive data is connected to unauthorized large language models or external AI tools. This pattern, commonly referred to as shadow AI, is one of the fastest-growing risks to data security in 2026.

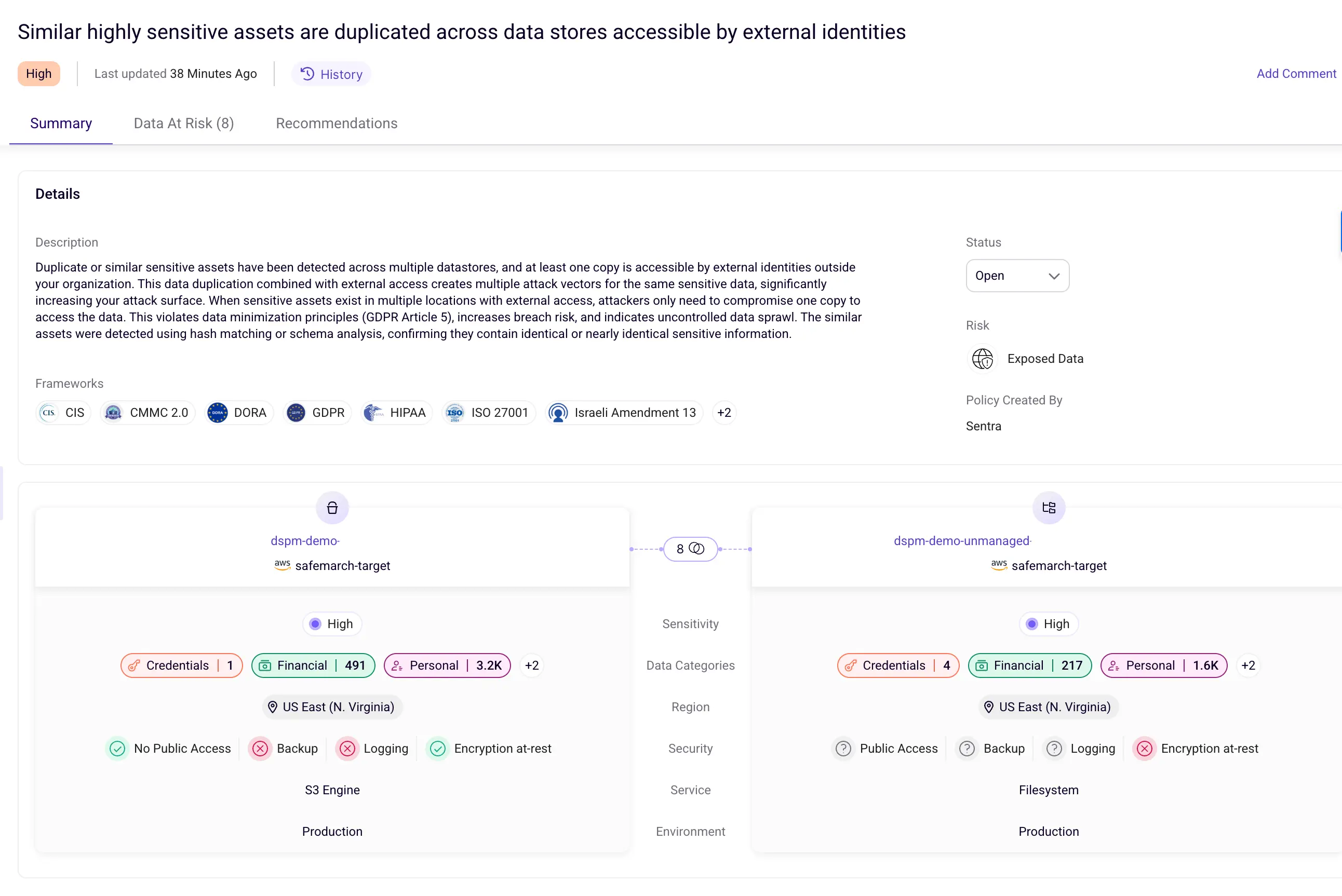

Identifying Toxic Combinations and Over-Permissioned Access

Toxic combinations occur when highly sensitive data is protected by overly broad or misconfigured access controls, creating elevated risk. These scenarios are especially dangerous because they place critical data behind permissive access, effectively increasing the potential blast radius of a security incident.

Advanced data security platforms identify toxic combinations by correlating data sensitivity with access permissions in real time. The process begins with automated data classification, using AI-powered techniques to identify sensitive information such as personally identifiable information (PII), financial data, intellectual property, and regulated datasets.

Once data is classified, access structures are analyzed to uncover over-permissioned configurations. This includes detecting global access groups (such as “Everyone” or “Authenticated Users”), excessive sharing permissions, and privilege creep where users accumulate access beyond what their role requires.

When sensitive data is found in environments with permissive access controls, these intersections are flagged as toxic risks. Risk scoring typically accounts for factors such as data sensitivity, scope of access, user behavior patterns, and missing safeguards like multi-factor authentication, enabling security teams to prioritize remediation effectively.
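The correlation described above can be sketched as a scoring function that multiplies a sensitivity weight by an exposure factor and a penalty for missing safeguards. The weights, the `DataStore` fields, and the example stores are assumptions chosen for readability; an actual platform would tune these factors and include behavioral signals as well.

```python
from dataclasses import dataclass

# Illustrative weights only; not taken from any real scoring model.
SENSITIVITY_WEIGHT = {"public": 0, "internal": 1, "confidential": 3, "restricted": 5}
GLOBAL_GROUPS = {"Everyone", "Authenticated Users"}

@dataclass
class DataStore:
    name: str
    sensitivity: str
    groups_with_access: set
    mfa_required: bool

def toxic_score(store):
    """Higher scores mean more sensitive data behind more permissive access."""
    sensitivity = SENSITIVITY_WEIGHT[store.sensitivity]
    # Access granted to a global group greatly widens the blast radius.
    exposure = 3 if store.groups_with_access & GLOBAL_GROUPS else 1
    # A missing safeguard such as MFA doubles the score.
    mfa_penalty = 1 if store.mfa_required else 2
    return sensitivity * exposure * mfa_penalty

stores = [
    DataStore("hr-payroll", "restricted", {"Everyone", "HR"}, mfa_required=False),
    DataStore("finance-ledger", "restricted", {"Finance"}, mfa_required=True),
    DataStore("team-wiki", "internal", {"Everyone"}, mfa_required=True),
]

for store in sorted(stores, key=toxic_score, reverse=True):
    print(store.name, toxic_score(store))
# hr-payroll 30
# finance-ledger 5
# team-wiki 3
```

Sorting by score gives security teams a simple remediation order: the payroll store, which combines restricted data, a global group, and no MFA, rises to the top.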

Detecting Shadow AI and Unauthorized Data Connections

Shadow AI refers to the use of unauthorized or unsanctioned AI tools and large language models that are connected to sensitive organizational data without security or IT oversight. As AI adoption accelerates in 2026, detecting these hidden data connections has become a critical component of modern data threat detection. Detection of shadow AI begins with continuous discovery and inventory of AI usage across the organization, including both approved and unapproved tools.

Advanced platforms employ multiple detection techniques to identify unauthorized AI activity, such as:

- Scanning unstructured data repositories to identify model files or binaries associated with unsanctioned AI deployments

- Analyzing email and identity signals to detect registrations and usage notifications from external AI services

- Inspecting code repositories for embedded API keys or calls to external AI platforms

- Monitoring cloud-native AI services and third-party model hosting platforms for unauthorized data connections
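As an example of the code repository technique listed above, the following sketch scans source text line by line for calls to external AI endpoints and for strings that look like API keys. The two hostnames are real public API hosts, but the key pattern is a loose illustrative guess rather than an official key format, and the sample key is an obvious placeholder. A real scanner would cover many more providers and would expect false positives.

```python
import re

# Hostnames of two well-known external AI APIs.
AI_ENDPOINT_PATTERN = re.compile(r"api\.(openai|anthropic)\.com")
# Illustrative pattern only: "sk-" followed by a long token-like string.
API_KEY_PATTERN = re.compile(r"\bsk-[A-Za-z0-9_-]{20,}")

def scan_source(text):
    """Return (line_number, finding_type) tuples for suspicious lines."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        if AI_ENDPOINT_PATTERN.search(line):
            findings.append((line_no, "external_ai_endpoint"))
        if API_KEY_PATTERN.search(line):
            findings.append((line_no, "possible_api_key"))
    return findings

# Hypothetical file contents with a placeholder key.
sample = (
    "import requests\n"
    'URL = "https://api.openai.com/v1/chat/completions"\n'
    'KEY = "sk-EXAMPLEEXAMPLEEXAMPLE0000"\n'
)

print(scan_source(sample))
# [(2, 'external_ai_endpoint'), (3, 'possible_api_key')]
```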

To provide comprehensive coverage, leading systems combine AI Security Posture Management (AISPM) with AI runtime protection. AISPM maps which sensitive data is being accessed, by whom, and under what conditions, while runtime protection continuously monitors AI interactions, such as prompts, responses, and agent behavior, to detect misuse or anomalous activity in real time.

When risky behavior is detected, including attempts to connect sensitive data to unauthorized AI models, automated alerts are generated for investigation. In high-risk scenarios, remediation actions such as revoking access tokens, blocking network connections, or disabling data integrations can be triggered immediately to prevent further exposure.

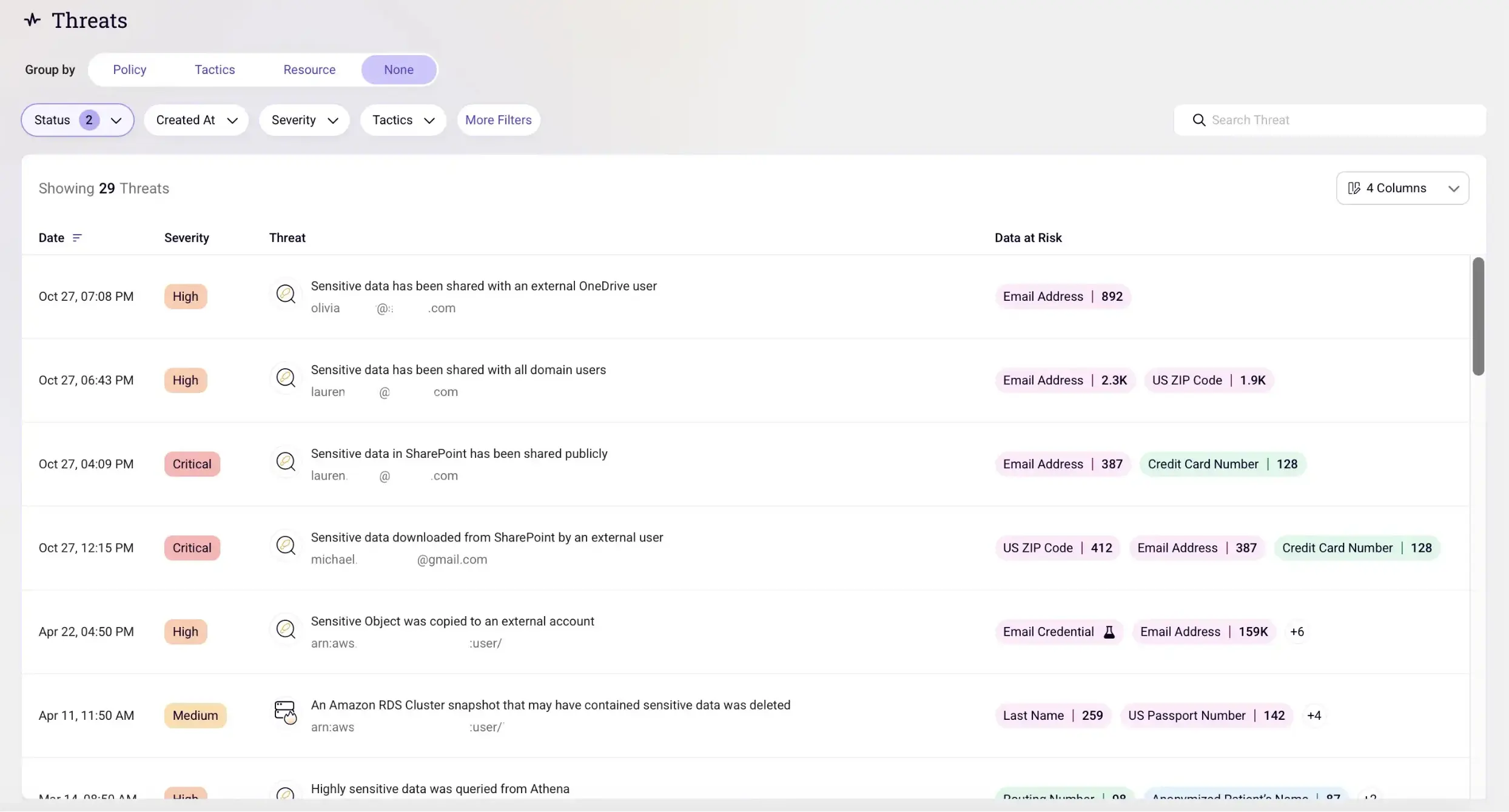

Real-Time Threat Monitoring and Response

Real-time threat monitoring and response form the operational core of modern data security, enabling organizations to detect suspicious activity and take action immediately as threats emerge. Rather than relying on periodic reviews or delayed investigations, these capabilities allow security teams to respond while incidents are still unfolding.

Continuous monitoring aggregates signals from across the environment, including network activity, system logs, cloud configurations, and user behavior. This unified visibility allows systems to maintain up-to-date behavioral baselines and identify deviations such as unusual access attempts, unexpected data transfers, or activity occurring outside normal usage patterns.

Advanced analytics powered by AI and machine learning evaluate these signals in real time to distinguish benign anomalies from genuine threats. This approach is particularly effective at identifying complex attack scenarios, including insider misuse, zero-day exploits, and multi-stage campaigns that evolve gradually and evade traditional point-in-time detection.

When high-risk activity is detected, automated alerting and response mechanisms accelerate containment. Actions such as isolating affected resources, blocking malicious traffic, or revoking compromised credentials can be initiated within seconds, significantly reducing the window of exposure and limiting potential impact compared to manual response processes.
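A tiered response policy like the one described above might be expressed as a simple mapping from a risk score to a list of actions. The score ranges and action names in this sketch are hypothetical. They only illustrate the idea that containment steps escalate with severity while lower-risk findings go to a human for review.

```python
def choose_response(risk_score):
    """Map a 0-100 risk score to an ordered list of response actions."""
    if risk_score >= 80:
        return ["revoke_credentials", "isolate_resource", "alert_security_team"]
    if risk_score >= 50:
        return ["restrict_access", "alert_security_team"]
    if risk_score >= 20:
        return ["alert_security_team"]
    return []  # below the alerting threshold: log only

for score in (10, 35, 65, 92):
    print(score, choose_response(score))
# 10 []
# 35 ['alert_security_team']
# 65 ['restrict_access', 'alert_security_team']
# 92 ['revoke_credentials', 'isolate_resource', 'alert_security_team']
```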

Sentra’s Approach to Real-Time Data Threat Detection

Sentra applies real-time data threat detection through a cloud-native platform designed to deliver continuous visibility and control without moving sensitive data outside the customer’s environment. By performing discovery, classification, and analysis in place across hybrid, private, and cloud environments, Sentra enables organizations to monitor data risk while preserving performance and privacy.

At the core of this approach is DataTreks™, which provides a contextual map of the entire data estate. DataTreks tracks where sensitive data resides and how it moves across ETL processes, database migrations, backups, and AI pipelines. This lineage-driven visibility allows organizations to identify risky data flows across regions, environments, and unauthorized destinations.

Sentra identifies toxic combinations by correlating data sensitivity with access controls in real time. The platform’s AI-powered classification engine accurately identifies sensitive information and maps these findings against permission structures to pinpoint scenarios where high-value data is exposed through overly broad or misconfigured access controls.

For shadow AI detection, Sentra continuously monitors data flows across the enterprise, including data sources accessed by AI tools and services. The system routinely audits AI interactions and compares them against a curated inventory of approved tools and integrations. When unauthorized connections are detected, such as sensitive data being fed into unapproved large language models (LLMs), automated alerts are generated with granular contextual details, enabling rapid investigation and remediation.
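Comparing observed AI connections against an approved inventory is, at its simplest, a membership check. The sketch below is a generic illustration of that idea and is not Sentra's implementation. The tool names, record fields, and the `unauthorized_connections` function are all hypothetical.

```python
# Hypothetical inventory of sanctioned AI tools.
APPROVED_AI_TOOLS = {"internal-copilot", "approved-llm-gateway"}

def unauthorized_connections(observed, approved=APPROVED_AI_TOOLS):
    """Return connections that send sensitive data to a tool not in the
    approved inventory."""
    return [
        conn for conn in observed
        if conn["contains_sensitive_data"] and conn["destination"] not in approved
    ]

# Hypothetical observed data flows.
observed = [
    {"source": "crm.contacts", "destination": "internal-copilot", "contains_sensitive_data": True},
    {"source": "hr.salaries", "destination": "unknown-llm-plugin", "contains_sensitive_data": True},
    {"source": "docs.public", "destination": "unknown-llm-plugin", "contains_sensitive_data": False},
]

for conn in unauthorized_connections(observed):
    print(f"ALERT: {conn['source']} -> {conn['destination']}")
# ALERT: hr.salaries -> unknown-llm-plugin
```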

User Reviews (January 2026):

What Users Like:

- Data discovery capabilities and comprehensive reporting

- Fast, context-aware data security with reduced manual effort

- Ability to identify sensitive data and prioritize risks efficiently

- Significant improvements in security posture and compliance

Key Benefits:

- Unified visibility across IaaS, PaaS, SaaS, and on-premises file shares

- Approximately 20% reduction in cloud storage costs by eliminating shadow and ROT data

Conclusion: Real-Time Data Threat Detection in 2026

Real-time data threat detection has become an essential capability for organizations navigating the complex security challenges of the AI era. By combining continuous monitoring, AI-powered analytics, comprehensive data lineage tracking, and automated response capabilities, modern platforms enable enterprises to detect and neutralize threats before they result in data breaches or compliance violations.

As sensitive data continues to proliferate across hybrid environments and AI adoption accelerates, the ability to maintain real-time visibility and control over data security posture will increasingly differentiate organizations that thrive from those that struggle with persistent security incidents and regulatory challenges.
