Ofir Yehoshua

Ofir is the Senior Backend Architect and Head of Platform at Sentra, where he leads the design and development of scalable, high-performance systems. With deep expertise in cloud architecture, distributed systems, and modern backend technologies, he drives platform innovation that powers Sentra’s growth.

Ofir's Data Security Posts

Why Infrastructure Security Is Not Enough to Protect Sensitive Data

For years, security programs have focused on protecting infrastructure: networks, servers, endpoints, and applications. That approach made sense when systems were static and data rarely moved. It’s no longer enough.

Recent breach data shows a consistent pattern. Organizations detect incidents, restore systems, and close tickets, yet remain unable to answer the most important questions regulators and customers ask:

Where does my sensitive data reside?

Who or what has access to this data and are they authorized?

Which specific sensitive datasets were accessed or exfiltrated?

Infrastructure security alone cannot answer these questions.

Infrastructure Alerts Detect Events, Not Impact

Most security tooling is infrastructure-centric by design. SIEMs, EDRs, NDRs, and CSPM tools monitor hosts, processes, IPs, and configurations. When something abnormal happens, they generate alerts.

What they do not tell you is:

- Which specific datasets were accessed

- Whether those datasets contained PHI or PII

- Whether sensitive data was copied, moved, or exfiltrated

Traditional tools monitor the "plumbing" (network traffic, server logs, and so on). While they can flag that a database was accessed from an unauthorized IP, they often cannot distinguish between an attacker downloading a public template and an attacker downloading a table containing 50,000 Social Security numbers. An alert that a system was accessed is not the same as understanding the exposure of the data stored inside it. Without that context, incident response teams are forced to infer impact rather than determine it.
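To make the distinction concrete, here is a minimal Python sketch of enriching an infrastructure alert with data classification context. The `Alert` and `DatasetRecord` structures, the field names, and the sample inventory are all hypothetical and exist only for illustration; they are not the schema of any particular SIEM or of Sentra.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetRecord:
    """One entry in a hypothetical data classification inventory."""
    resource: str            # e.g. a table or object path
    data_classes: frozenset  # e.g. {"SSN", "PII"}; empty means nothing sensitive found
    record_count: int


@dataclass(frozen=True)
class Alert:
    """A simplified infrastructure alert: which IP touched which resource."""
    source_ip: str
    resource: str


# Hypothetical inventory, as a prior discovery and classification pass might produce it.
INVENTORY = {
    "db1.public.templates": DatasetRecord("db1.public.templates", frozenset(), 120),
    "db1.hr.employees": DatasetRecord(
        "db1.hr.employees", frozenset({"SSN", "PII"}), 50_000
    ),
}


def assess_impact(alert: Alert, inventory: dict) -> str:
    """Translate 'resource X was accessed' into a statement about data exposure."""
    record = inventory.get(alert.resource)
    if record is None:
        # No classification available: the team can only infer impact.
        return "unknown: resource not classified, assume worst case"
    if not record.data_classes:
        return "low: no sensitive data classes found"
    classes = ", ".join(sorted(record.data_classes))
    return f"high: {record.record_count} records containing {classes}"


if __name__ == "__main__":
    for resource in ("db1.public.templates", "db1.hr.employees", "db1.tmp.export"):
        print(resource, "->", assess_impact(Alert("203.0.113.7", resource), INVENTORY))
```

The same unauthorized-IP alert yields three different answers depending on what the inventory knows about the resource. That is the gap between detecting an event and determining its impact.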

The “Did They Access the Data?” Problem

This gap becomes pronounced during ransomware and extortion incidents.

In many cases:

- Operations are restored from backups

- Infrastructure is rebuilt

- Access is reduced

- (Hopefully!) attackers are removed from the environment

Yet organizations still cannot confirm whether sensitive data was accessed or exfiltrated during the dwell time.

Without data-level visibility:

- Legal and compliance teams must assume worst-case exposure

- Breach notifications expand unnecessarily

- Regulatory penalties increase due to uncertainty, not necessarily damage

The inability to scope an incident accurately is not a tooling failure during the breach; it is a visibility failure that existed long before the breach occurred. Under regulations like GDPR or CCPA/CPRA, an organization that cannot prove that sensitive data wasn’t accessed during a breach is often legally required to notify all potentially affected parties. This ‘over-notification’ is costly and damaging to reputation.

Data Movement Is the Real Attack Vulnerability

Modern environments are defined by constant data movement:

- Cloud migrations

- SaaS integrations

- App dev lifecycles

- Analytics and ETL pipelines

- AI and ML workflows

Each transition creates blind spots.

Legacy platforms awaiting migration often exist in a “wait state” with reduced monitoring. Data copied into cloud storage or fed into AI pipelines frequently loses lineage and classification context. Posture may vary and traditional controls no longer apply consistently. From an attacker’s perspective, these environments are ideal. From a defender’s perspective, they are blind spots.

Policies Are Not Proof

Most organizations can produce policies stating that sensitive data is encrypted, access-controlled, and monitored. Increasingly, regulators are moving from point-in-time audits to requiring continuous evidence of control.

Regulators are asking for evidence:

- Where does PHI live right now?

- Who or what can access it?

- How do you know this hasn’t changed since the last audit?

Point-in-time audits cannot answer those questions. Neither can static documentation. Exposure and access drift continuously, especially in cloud and AI-driven environments.

Compliance depends on continuous control, not periodic attestation.
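As a rough illustration of what "continuous" means in practice, the sketch below compares two snapshots of who can access which dataset and reports what changed in between. The snapshot format (a mapping from dataset name to a set of identities) and the example names are assumptions made for this sketch, not a real product export.

```python
def access_drift(
    previous: dict[str, set[str]],
    current: dict[str, set[str]],
) -> dict[str, dict[str, set[str]]]:
    """Return, per dataset, the identities granted or revoked between two snapshots."""
    drift = {}
    for dataset in previous.keys() | current.keys():
        before = previous.get(dataset, set())
        after = current.get(dataset, set())
        granted = after - before   # access that appeared since the last snapshot
        revoked = before - after   # access that disappeared
        if granted or revoked:
            drift[dataset] = {"granted": granted, "revoked": revoked}
    return drift


if __name__ == "__main__":
    # Hypothetical snapshots: one taken at the last audit, one taken today.
    last_audit = {"phi.patients": {"svc-billing", "alice"}}
    today = {
        "phi.patients": {"svc-billing", "alice", "ml-pipeline"},
        "phi.patients_copy": {"contractor-7"},
    }
    for dataset, change in sorted(access_drift(last_audit, today).items()):
        print(dataset, change)
```

A point-in-time audit sees only one of the two snapshots. Run on every change or on a short schedule, a comparison like this surfaces new access paths and new copies of sensitive data as they appear.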

What Data-Centric Security Actually Requires

Accurately proving compliance and scoping breach impact requires security visibility that is anchored to the data itself, not the infrastructure surrounding it.

At a minimum, this means:

- Continuous discovery and classification of sensitive data

- Consistent compliance reporting and controls across cloud, SaaS, on-premises, and migration states

- Clear visibility into which identities, services, and AI tools can access specific datasets

- Detection and response signals tied directly to sensitive data exposure and movement

This is the operational foundation of Data Security Posture Management (DSPM) and Data Detection and Response (DDR). These capabilities do not replace infrastructure security controls; they close the gap those controls leave behind by connecting security events to actual data impact.

This is the problem space Sentra was built to address.

Sentra provides continuous visibility into where sensitive data lives, how it moves, and who or what can access it, and ties security and compliance outcomes to that visibility. Without this layer, organizations are forced to infer breach impact and compliance posture instead of proving it.

Why Data-Centric Security Is Required for Today's Compliance and Breach Response

Infrastructure security can detect that an incident occurred, but it cannot determine which sensitive data was accessed, copied, or exfiltrated. Without data-level evidence, organizations cannot accurately scope breaches, contain risk, or prove compliance, regardless of how many alerts or controls are in place. Modern breach response and regulatory compliance require continuous visibility into sensitive data, its lineage, and its access paths. Infrastructure-only security models are no longer sufficient.


How to Gain Visibility and Control in Petabyte-Scale Data Scanning

Every organization today is drowning in data: millions of assets spread across cloud platforms, on-premises systems, and an ever-expanding landscape of SaaS tools. Each asset carries value, but also risk. For security and compliance teams, the mandate is clear: sensitive data must be inventoried, managed, and protected.

Scanning every asset for security and compliance is no longer optional; it’s the line between trust and exposure, between resilience and chaos.

Many data security tools promise to scan and classify sensitive information across environments. In practice, doing this effectively and at scale demands more than raw ‘brute force’ scanning power. It requires robust visibility and management capabilities: a cockpit view that lets teams monitor coverage, prioritize intelligently, and strike the right balance between scan speed, cost, and accuracy.

Why Scan Tracking Is Crucial

Scanning is not instantaneous. Depending on the size and complexity of your environment, it can take days, sometimes even weeks, to complete. Meanwhile, new data is constantly being created or modified, adding to the challenge.

Without clear visibility into the scanning process, organizations face several critical obstacles:

- Unclear progress: It’s often difficult to know what has already been scanned, what is currently in progress, and what remains pending. This lack of clarity creates blind spots that undermine confidence in coverage.

- Time estimation gaps: In large environments, it’s hard to know how long scans will take because so many factors come into play: the number of assets, their size, the type of data (structured, semi-structured, or unstructured), and how much scanner capacity is available. As a result, predicting when you’ll reach full coverage is tricky. This becomes especially stressful when scans need to be completed before a fixed deadline, like a compliance audit.

“With Sentra’s Scan Dashboard, we were able to quickly scale up our scanners to meet a tight audit deadline, finish on time, and then scale back down to save costs. The visibility and control it gave us made the whole process seamless,” said the CISO of a large retailer.

- Poor prioritization: Not all environments or assets carry the same importance. Yet without visibility into scan status, teams struggle to balance historical scans of existing assets with the ongoing influx of newly created data, making it nearly impossible to prioritize effectively based on risk or business value.

Sentra’s End-to-End Scanning Workflow

Managing scans at petabyte scale is complex. Sentra streamlines the process with a workflow built for scale, clarity, and control that features:

1. Comprehensive Asset Discovery

Before scanning even begins, Sentra automatically discovers assets across cloud platforms, on-premises systems, and SaaS applications. This ensures teams have a complete, up-to-date inventory and visual map of their data landscape, so no environment or data store is overlooked.

Example: New S3 buckets, a freshly deployed BigQuery dataset, or a newly connected SharePoint site are automatically identified and added to the inventory.

2. Configurable Scan Management

Administrators can fine-tune how scans are executed to meet their organization’s needs. With flexible configuration options such as number of scanners, sampling rates, and prioritization rules, teams can strike the right balance between scan speed, coverage, and cost control.

For instance, compliance-critical assets can be scanned at full depth immediately, while less critical environments can run at reduced sampling to save on compute consumption and costs.
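The trade-off described above can be sketched in a few lines of Python. This is an illustrative model only: the `Asset` fields, the `build_scan_plan` function, and the default 10% reduced sampling rate are assumptions made for this example and do not reflect Sentra’s actual configuration options.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    size_gb: float
    compliance_critical: bool


def build_scan_plan(assets, reduced_sampling_rate=0.1):
    """Assign a sampling rate per asset and order the scan queue.

    Compliance-critical assets are scanned at full depth (rate 1.0) and go first;
    everything else is sampled at the reduced rate to save compute.
    """
    plan = []
    for asset in assets:
        rate = 1.0 if asset.compliance_critical else reduced_sampling_rate
        plan.append(
            {
                "asset": asset.name,
                "critical": asset.compliance_critical,
                "sampling_rate": rate,
                "scan_gb": asset.size_gb * rate,  # data volume actually read
            }
        )
    # Critical assets first; within each group, smaller scans first (an arbitrary
    # tie-break chosen here so coverage numbers move quickly).
    plan.sort(key=lambda item: (not item["critical"], item["scan_gb"]))
    return plan


if __name__ == "__main__":
    assets = [
        Asset("app-logs", 1000, False),
        Asset("patient-records", 200, True),
        Asset("marketing-assets", 50, False),
    ]
    for item in build_scan_plan(assets):
        print(item)
```

In this model, reducing the sampling rate for non-critical assets cuts the data volume read roughly in proportion. That is where the compute savings come from, at the cost of shallower coverage on those assets.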

3. Real-Time Scan Dashboard

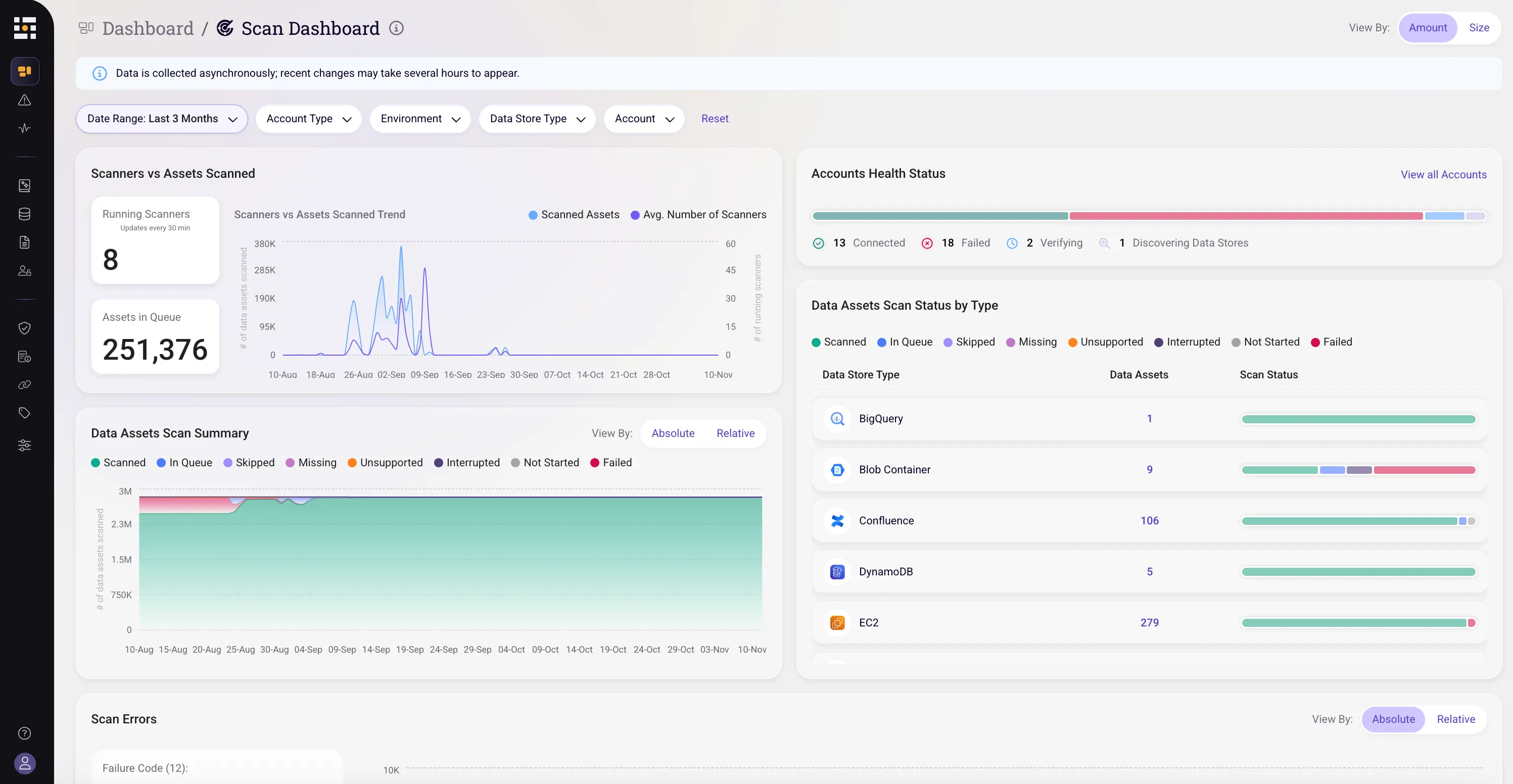

Sentra’s unified Scan Dashboard provides a cockpit view into scanning operations, so teams always know where they stand. Key features include:

- Daily scan throughput correlated with the number of active scanners, helping teams understand efficiency and predict completion times.

- Coverage tracking that visualizes overall progress and highlights which assets remain unscanned.

- Decision-making tools that allow teams to dynamically adjust, whether by adding scanner capacity, changing sampling rates, or reordering priorities when new high-risk assets appear.
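The relationship between throughput, scanner count, and completion time can be approximated with simple arithmetic. The sketch below assumes throughput scales linearly with the number of scanners and that new data arrives at a steady daily rate. Both are simplifications, and the function names and figures are hypothetical.

```python
import math


def estimate_days_remaining(remaining_tb, tb_per_scanner_per_day, scanners,
                            new_tb_per_day=0.0):
    """Estimate days to full coverage, net of newly created data."""
    if tb_per_scanner_per_day <= 0 or scanners <= 0:
        raise ValueError("throughput and scanner count must be positive")
    net_rate = tb_per_scanner_per_day * scanners - new_tb_per_day
    if net_rate <= 0:
        # Data arrives at least as fast as it is scanned: the backlog never clears.
        return math.inf
    return remaining_tb / net_rate


def scanners_needed(remaining_tb, tb_per_scanner_per_day, days_until_deadline,
                    new_tb_per_day=0.0):
    """Smallest scanner count that clears the backlog before the deadline."""
    if tb_per_scanner_per_day <= 0 or days_until_deadline <= 0:
        raise ValueError("throughput and deadline must be positive")
    required_rate = remaining_tb / days_until_deadline + new_tb_per_day
    return math.ceil(required_rate / tb_per_scanner_per_day)


if __name__ == "__main__":
    # Hypothetical numbers: 1,200 TB unscanned, each scanner handles 5 TB/day.
    print(estimate_days_remaining(1200, 5, scanners=8))       # 8 scanners, no new data
    print(scanners_needed(1200, 5, days_until_deadline=10))   # audit in 10 days
```

With these made-up figures, eight scanners need about 30 days, while a 10-day deadline requires 24 scanners. That is the kind of scale-up-then-scale-down decision a throughput view is meant to support.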

Handling Data Changes

The challenge doesn’t end once the initial scans are complete. Data is dynamic: new files are added daily, existing records are updated, and sensitive information shifts locations. Sentra’s activity feeds give teams the visibility they need to understand how their data landscape is evolving and adapt their data security strategies in real time.

Conclusion

Tracking scan status at scale is complex but critical to any data security strategy. Sentra provides an end-to-end view and unmatched scan control, helping organizations move from uncertainty to confidence with clear prediction of scan timelines, faster troubleshooting, audit-ready compliance, and smarter, cost-efficient decisions for securing data.