New AI-Assistant, Sentra Jagger, Is a Game Changer for DSPM and DDR

Evolution of Large Language Models (LLMs)

In the early 2000s, Google, Yahoo, and other search engines gained widespread popularity. Users found search to be a convenient tool that effortlessly brought a wealth of information to their fingertips. Fast forward to the 2020s, and Large Language Models (LLMs) are pushing productivity to the next level. LLMs remove the need to learn a tool before using it, seamlessly bridging the gap between technology and the user.

LLMs create a natural interface between the user and the platform. By interpreting natural language queries, they effortlessly translate human requests into software actions and technical operations. This simplifies technology to the point of making it close to invisible. Users no longer need to understand the technology itself or how to retrieve certain data; they can simply enter a query in natural language, and the LLM translates it into the right operations.

Revolutionizing Cloud Data Security With Sentra Jagger

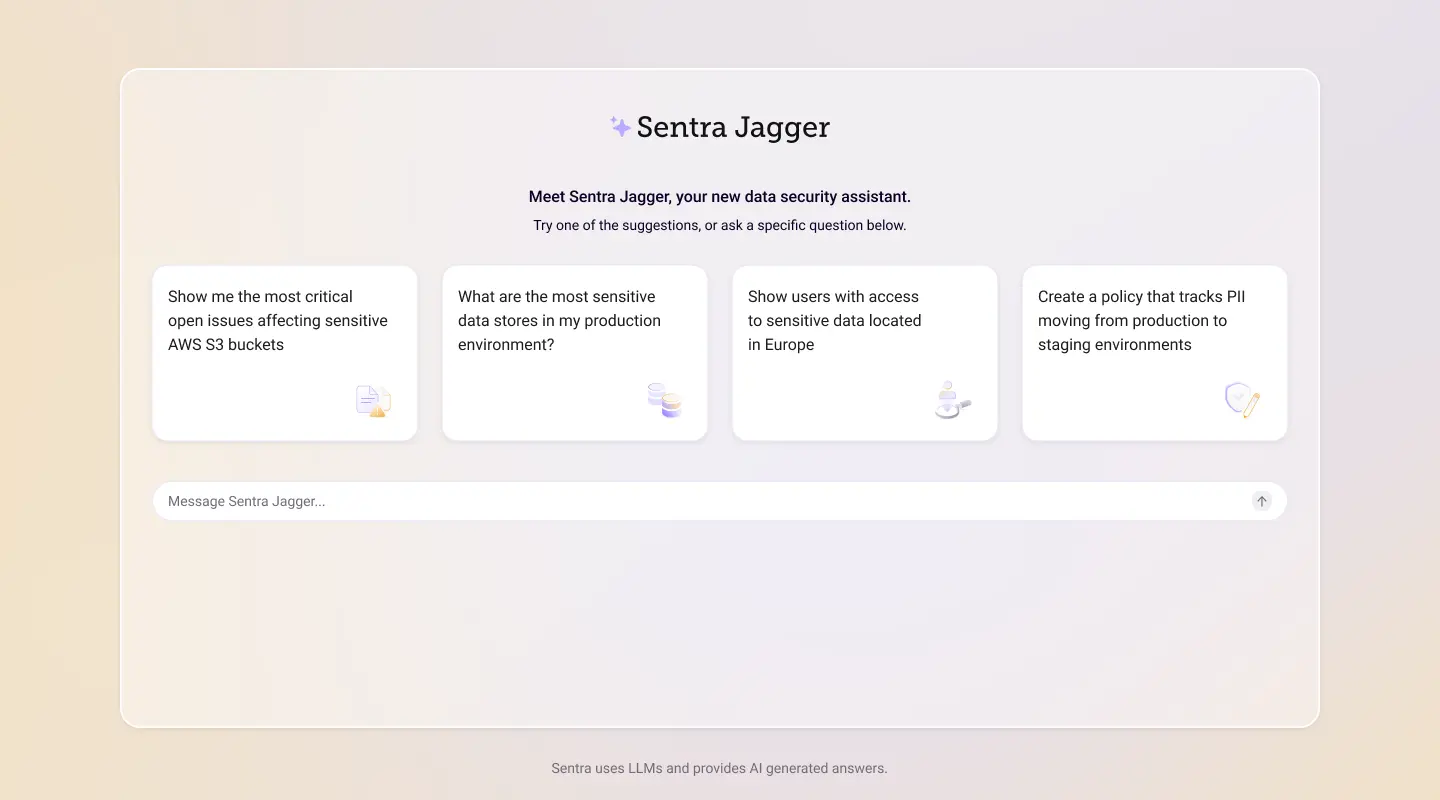

Sentra Jagger is an industry-first AI assistant for cloud data security, built on a Large Language Model (LLM).

It enables users to quickly analyze and respond to security threats, cutting task times by up to 80%. Jagger answers data security questions and helps with policy customization and enforcement, settings customization, creation of new data classifiers, and compliance reporting. By reducing the time needed to investigate and address security threats, Sentra Jagger enhances operational efficiency and reinforces security measures.

Security teams can access insights and recommendations on specific security actions through an interactive, user-friendly interface. Customizable dashboards, tailored to user roles and preferences, enhance visibility into an organization's data. Users can ask about findings directly, eliminating the need to navigate through complicated portals or ancillary information.

Benefits of Sentra Jagger

- Accessible Security Insights: Simplified interpretation of complex security queries, offering clear and concise explanations in plain language to empower users across different levels of expertise. This helps users make informed decisions swiftly, and confidently take appropriate actions.

- Enhanced Incident Response: Clear steps to identify and fix issues, making the process faster, minimizing downtime and damage, and restoring normal operations promptly.

- Unified Security Management: Integration with existing tools, creating a unified security management experience and providing a complete view of the organization's data security posture. Jagger also speeds solution customization and tuning.

Why Sentra Jagger Is Changing the Game for DSPM and DDR

Sentra Jagger is an essential tool for simplifying the complexities of both Data Security Posture Management (DSPM) and Data Detection and Response (DDR) functions. DSPM discovers and accurately classifies your sensitive data anywhere in the cloud environment, understands who can access this data, and continuously assesses its vulnerability to security threats and risk of regulatory non-compliance. DDR focuses on swiftly identifying and responding to security incidents and emerging threats, ensuring that the organization’s data remains secure. With their ability to interpret natural language, LLM-based assistants such as Sentra Jagger serve as transformative agents in bridging the comprehension gap between cybersecurity professionals and the intricate worlds of DSPM and DDR.

Data Security Posture Management (DSPM)

When it comes to data security posture management (DSPM), Sentra Jagger empowers users to articulate security-related queries in plain language, seeking insights into cybersecurity strategies, vulnerability assessments, and proactive threat management.

The language models not only comprehend the linguistic nuances but also translate these queries into actionable insights, making data security more accessible to a broader audience. This democratization of security knowledge is a pivotal step forward, enabling organizations to empower diverse teams (including privacy, governance, and compliance roles) to actively engage in bolstering their data security posture without requiring specialized cybersecurity training.

Data Detection and Response (DDR)

In the realm of data detection and response (DDR), Sentra Jagger contributes to breaking down technical barriers by allowing users to interact with the platform to seek information on DDR configurations, real-time threat detection, and response strategies. Our AI-powered assistant transforms DDR-related technical discussions into accessible conversations, empowering users to understand and implement effective threat protection measures without grappling with the intricacies of data detection and response technologies.

The integration of LLMs into the realms of DSPM and DDR marks a paradigm shift in how users will interact with and comprehend complex cybersecurity concepts. Their role as facilitators of knowledge dissemination removes traditional barriers, fostering widespread engagement with advanced security practices.

Sentra Jagger is a game changer because it makes advanced technological knowledge more inclusive, allowing organizations and individuals to fortify their cybersecurity practices with unprecedented ease. It helps security teams better communicate with and integrate within the rest of the business. As AI-powered assistants continue to evolve, so will their ability to reshape the accessibility and comprehension of intricate technological domains.

How CISOs Can Leverage Sentra Jagger

Consider a Chief Information Security Officer (CISO) in charge of cybersecurity at a healthcare company. To assess the security policies governing sensitive data in their environment, the CISO leverages Sentra’s Jagger AI assistant. Instead of manually navigating through the Sentra policy page, the CISO, let's call her Sara, can simply query Jagger, asking, "What policies are defined in my environment?" In response, Jagger provides a comprehensive list of policies, including their names, descriptions, active issues, creation dates, and status (enabled or disabled).

Sara can then add a custom policy related to GDPR simply by describing it, for example: "Add a policy that tracks European customer information moving outside of Europe." Sentra Jagger will use Natural Language Processing (NLP) to translate the request into a Sentra policy and inform Sara about potential non-compliant data movement based on the recently added policy.

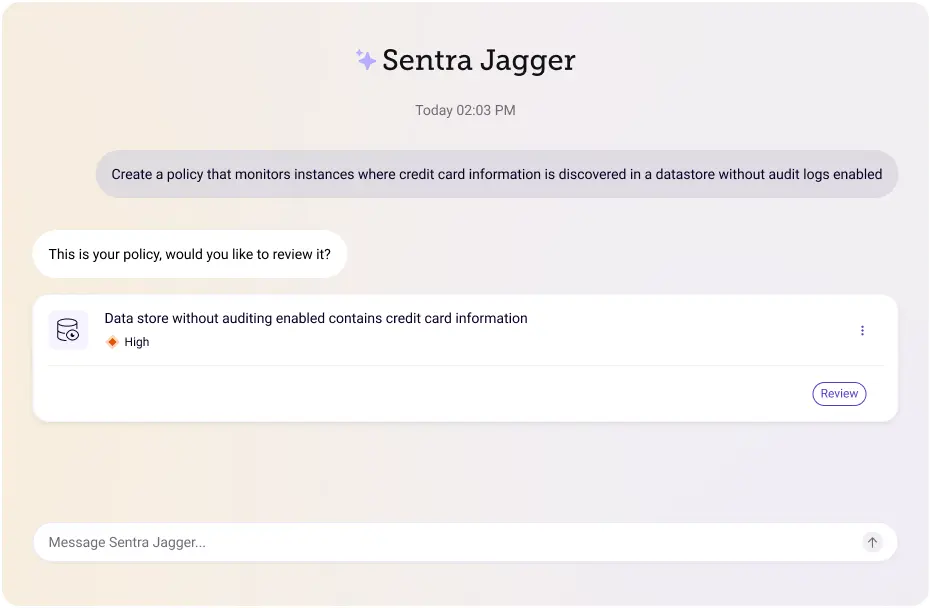
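To make the idea concrete, here is a minimal Python sketch of the kind of rule such a request might translate into. It is purely illustrative and is not Sentra's policy format: the `DataMovement` record, the `eu_customer_info` label, and the short `EU_REGIONS` list are all assumptions made for this example.

```python
from dataclasses import dataclass

# Hypothetical, abbreviated list of European cloud regions (assumption for illustration).
EU_REGIONS = {"eu-west-1", "eu-central-1", "eu-north-1"}


@dataclass(frozen=True)
class DataMovement:
    """One observed copy or transfer of data between regions (hypothetical record)."""
    data_class: str
    source_region: str
    destination_region: str


def violates_eu_residency(movement: DataMovement) -> bool:
    """Flag European customer information that leaves a European region."""
    return (
        movement.data_class == "eu_customer_info"
        and movement.source_region in EU_REGIONS
        and movement.destination_region not in EU_REGIONS
    )


movements = [
    DataMovement("eu_customer_info", "eu-west-1", "us-east-1"),     # leaves Europe
    DataMovement("eu_customer_info", "eu-west-1", "eu-central-1"),  # stays in Europe
    DataMovement("app_logs", "eu-west-1", "us-east-1"),             # not customer data
]

flagged = [m for m in movements if violates_eu_residency(m)]
print(f"{len(flagged)} potentially non-compliant movement(s) found")
```

In this sketch only the first movement is flagged, since it is the only one that involves European customer information and ends outside the listed European regions.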

Upon thorough review, Sara identifies a need for a new policy: "Create a policy that monitors instances where credit card information is discovered in a datastore without audit logs enabled." Sentra Jagger initiates the process of adding this policy by prompting Sara for additional details and confirmation.

The LLM-assistant, Sentra Jagger, communicates, "Hi Sara, it seems like a valuable policy to add. Credit card information should never be stored in a datastore without audit logs enabled. To ensure the policy aligns with your requirements, I need more information. Can you specify the severity of alerts you want to raise and any compliance standards associated with this policy?" Sara responds, stating, "I want alerts to be raised as high severity, and I want the AWS CIS benchmark to be associated with it."

Having captured all the necessary information, Sentra Jagger compiles a summary of the proposed policy and sends it to Sara for her review and confirmation. After Sara confirms the details, Sentra Jagger seamlessly incorporates the new policy into the system. This streamlined interaction with an LLM-based assistant enhances the efficiency of policy management for CISOs, enabling them to easily navigate, customize, and implement security measures in their organizations.
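The flow Sara follows (describe the rule, supply severity and compliance standard, review a summary, confirm) could be modeled roughly as shown below. This is a hedged Python sketch, not Sentra's actual schema or API: the `Policy` and `Datastore` classes, the `credit_card` label, and the helper functions are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List


@dataclass(frozen=True)
class Datastore:
    """A datastore as a scanner might describe it (hypothetical shape)."""
    name: str
    data_classes: FrozenSet[str]
    audit_logs_enabled: bool


@dataclass
class Policy:
    """Credit card data found in a datastore without audit logs (hypothetical shape)."""
    name: str
    severity: str
    compliance_frameworks: List[str] = field(default_factory=list)
    enabled: bool = False  # stays off until the user confirms the summary

    def matches(self, store: Datastore) -> bool:
        return "credit_card" in store.data_classes and not store.audit_logs_enabled


def summarize(policy: Policy) -> str:
    """Build the summary presented to the user for review."""
    frameworks = ", ".join(policy.compliance_frameworks) or "none"
    return f"{policy.name} | severity={policy.severity} | frameworks={frameworks}"


def find_violations(policy: Policy, stores: List[Datastore]) -> List[str]:
    """Return names of violating datastores; an unconfirmed policy reports nothing."""
    if not policy.enabled:
        return []
    return [s.name for s in stores if policy.matches(s)]


policy = Policy(
    name="Credit card data without audit logs",
    severity="high",
    compliance_frameworks=["AWS CIS benchmark"],
)
stores = [
    Datastore("payments-db", frozenset({"credit_card"}), audit_logs_enabled=False),
    Datastore("orders-db", frozenset({"credit_card"}), audit_logs_enabled=True),
    Datastore("logs-bucket", frozenset({"app_logs"}), audit_logs_enabled=False),
]

before = find_violations(policy, stores)  # policy not yet confirmed
print(summarize(policy))                  # summary shown for review
policy.enabled = True                     # the user confirms the details
after = find_violations(policy, stores)
print(after)
```

Keeping the policy disabled until confirmation mirrors the review step in the scenario: nothing is enforced or alerted on until the user has seen and approved the summary.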

Conclusion

The advent of Large Language Models (LLMs) has changed the way we interact with and understand technology. Building on the legacy of search engines, LLMs eliminate the learning curve, seamlessly translating natural language queries into software and technical actions. This innovation removes friction between users and technology, making intricate systems nearly invisible to the end user.

For Chief Information Security Officers (CISOs) and IT SecOps teams, LLMs offer a game-changing approach to cybersecurity. By interpreting natural language queries, Sentra Jagger bridges the comprehension gap between cybersecurity professionals and the intricate worlds of DSPM and DDR. This democratization of security knowledge allows organizations to empower a wider audience to actively engage in bolstering their data security posture and responding to security incidents, revolutionizing the cybersecurity landscape.

To learn more about Sentra, schedule a demo with one of our experts.