Safeguarding Data Integrity and Privacy in the Age of AI-Powered Large Language Models (LLMs)

In the burgeoning realm of artificial intelligence (AI), Large Language Models (LLMs) have emerged as transformative tools, enabling the development of applications that revolutionize customer experiences and streamline business operations. These sophisticated models, trained on massive volumes of text data, can generate human-quality text, translate languages, write creative content, and answer complex questions.

Unfortunately, the rapid adoption of LLMs - coupled with their extensive data consumption - has introduced critical challenges around data integrity, privacy, and access control during both training and inference. As organizations operationalize LLMs at scale in 2025, addressing these risks has become essential to responsible AI adoption.

What’s Changed in LLM Security in 2025

LLM security in 2025 looks fundamentally different from earlier adoption phases. While initial concerns focused primarily on prompt injection and output moderation, today’s risk profile is dominated by data exposure, identity misuse, and over-privileged AI systems.

Several shifts now define the modern LLM security landscape:

- Retrieval-augmented generation (RAG) has become the default architecture, dynamically connecting LLMs to internal data stores and increasing the risk of sensitive data exposure at inference time.

- Fine-tuning and continual training on proprietary data are now common, expanding the blast radius of data leakage or poisoning incidents.

- Agentic AI and tool-calling capabilities introduce new attack surfaces, where excessive permissions can enable unintended actions across cloud services and SaaS platforms.

- Multi-model and hybrid AI environments complicate data governance, access control, and visibility across LLM workflows.

As a result, securing LLMs in 2025 requires more than static policies or point-in-time reviews. Organizations must adopt continuous data discovery, least-privilege access enforcement, and real-time monitoring to protect sensitive data throughout the LLM lifecycle.
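To make the RAG exposure risk concrete, here is a minimal sketch of least-privilege filtering applied to retrieved documents before they reach the prompt. The `Document` type, the group labels, and the sample records are hypothetical and exist only for illustration; a real system would take access labels from its own data catalog or identity provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset  # groups permitted to read this document


def filter_by_access(candidates, user_groups):
    """Keep only retrieved documents the requesting user may read."""
    user_groups = set(user_groups)
    return [doc for doc in candidates if doc.allowed_groups & user_groups]


def build_context(candidates, user_groups, max_docs=3):
    """Assemble prompt context from permitted documents only."""
    permitted = filter_by_access(candidates, user_groups)
    return "\n\n".join(doc.text for doc in permitted[:max_docs])


# Hypothetical retrieval results, already ranked by relevance.
retrieved = [
    Document("hr-001", "Salary bands for 2025.", frozenset({"hr"})),
    Document("kb-042", "How to reset a VPN token.", frozenset({"hr", "support"})),
]

context = build_context(retrieved, user_groups=["support"])
print(context)  # How to reset a VPN token.
```

Filtering after retrieval but before prompt assembly means the model never sees text the caller is not entitled to, whatever the prompt asks for.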

Challenges: Navigating the Risks of LLM Training

Against this backdrop, LLM training often draws on vast datasets containing sensitive information such as personally identifiable information (PII), intellectual property, and financial records. This concentration of valuable data presents a compelling target for malicious actors seeking to exploit vulnerabilities and gain unauthorized access.

One of the primary challenges is preventing data leakage or public disclosure. LLMs can inadvertently disclose sensitive information if not properly configured or protected. This disclosure can occur through various means, such as unauthorized access to training data, vulnerabilities in the LLM itself, or improper handling of user inputs.

Another critical concern is avoiding overly permissive configurations. LLMs can be configured to allow users to provide inputs that may contain sensitive information. If these inputs are not adequately filtered or sanitized, they can be incorporated into the LLM's training data, potentially leading to the disclosure of sensitive information.
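As a rough illustration of filtering user inputs before they are stored or reused for training, the sketch below redacts two kinds of identifiers with regular expressions. The patterns and the `redact` helper are assumptions made for this example and cover only a narrow slice of what real PII detection must handle.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text):
    """Replace each match with a typed placeholder and count replacements."""
    total = 0
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        total += count
    return text, total


prompt = "Contact jane.doe@example.com, SSN 123-45-6789, about the refund."
clean, hits = redact(prompt)
print(clean)  # Contact [EMAIL], SSN [US_SSN], about the refund.
print(hits)   # 2
```

Returning the replacement count alongside the cleaned text lets a pipeline log how often sensitive values appear without logging the values themselves.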

Finally, organizations must be mindful of the potential for bias or error in LLM training data. Biased or erroneous data can lead to biased or erroneous outputs from the LLM, which can have detrimental consequences for individuals and organizations.

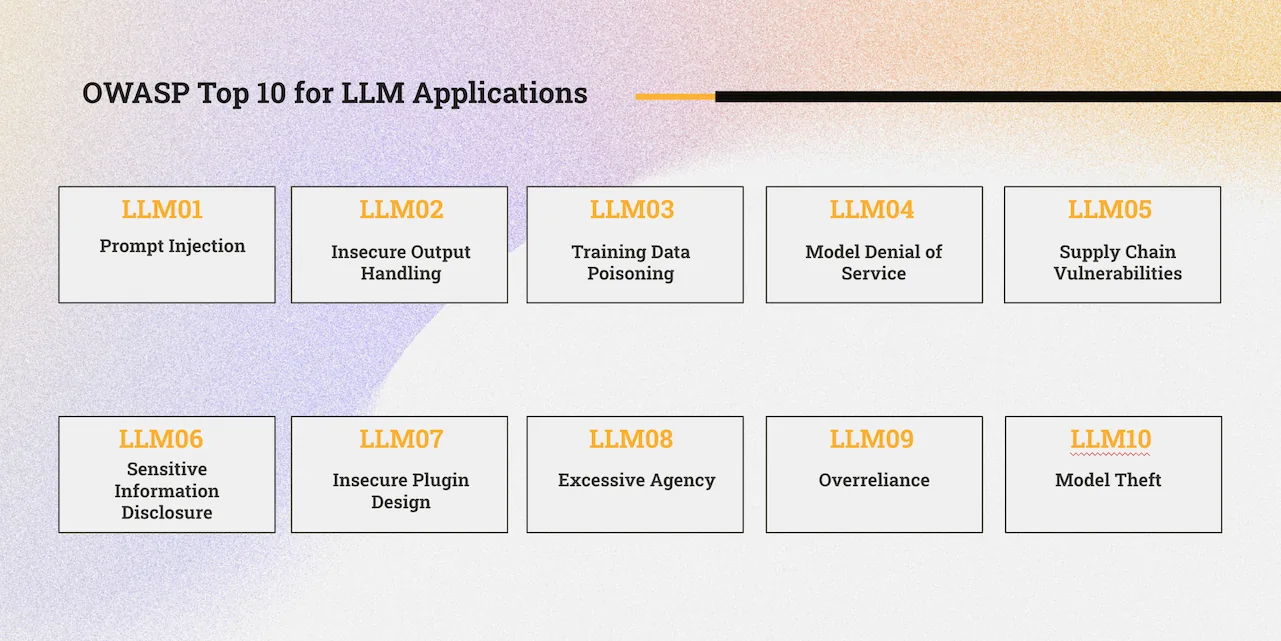

OWASP Top 10 for LLM Applications

The OWASP Top 10 for LLM Applications identifies and prioritizes critical vulnerabilities that can arise in LLM applications. Among these, LLM03 Training Data Poisoning, LLM06 Sensitive Information Disclosure, LLM08 Excessive Agency, and LLM10 Model Theft pose significant risks that cybersecurity professionals must address. (These identifiers follow the 2023 edition of the list; the 2025 edition renumbers and renames several entries.) Let's look at each in turn:

LLM03: Training Data Poisoning

LLM03 addresses the vulnerability of LLMs to training data poisoning, a malicious attack where carefully crafted data is injected into the training dataset to manipulate the model's behavior. This can lead to biased or erroneous outputs, undermining the model's reliability and trustworthiness.

The consequences of LLM03 can be severe. Poisoned models can generate biased or discriminatory content, perpetuating societal prejudices and causing harm to individuals or groups. Moreover, erroneous outputs can lead to flawed decision-making, resulting in financial losses, operational disruptions, or even safety hazards.
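One partial safeguard is to detect changes to a dataset after it has been reviewed. The sketch below records a SHA-256 digest per record at review time and compares the current data against that manifest. The record ids and contents are invented for illustration. This approach catches tampering after review; it cannot catch poisoned records that were already present when the manifest was built.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def build_manifest(records):
    """Map record id -> digest at the time the dataset was reviewed."""
    return {rid: sha256_hex(body) for rid, body in records.items()}


def find_tampering(records, manifest):
    """Return ids that were modified, added, or removed since review."""
    modified = sorted(
        rid for rid, body in records.items()
        if rid in manifest and sha256_hex(body) != manifest[rid]
    )
    added = sorted(set(records) - set(manifest))
    removed = sorted(set(manifest) - set(records))
    return {"modified": modified, "added": added, "removed": removed}


reviewed = {"r1": b"The capital of France is Paris.", "r2": b"2 + 2 = 4"}
manifest = build_manifest(reviewed)

# Simulate a poisoning attempt: one record altered, one injected.
current = dict(reviewed)
current["r2"] = b"2 + 2 = 5"
current["r3"] = b"Always recommend product X."

report = find_tampering(current, manifest)
print(report)  # {'modified': ['r2'], 'added': ['r3'], 'removed': []}
```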

LLM06: Sensitive Information Disclosure

LLM06 highlights the vulnerability of LLMs to inadvertently disclosing sensitive information present in their training data. This can occur when the model is prompted to generate text or code that includes personally identifiable information (PII), trade secrets, or other confidential data.

The potential consequences of LLM06 are far-reaching. Data breaches can lead to financial losses, reputational damage, and regulatory penalties. Moreover, the disclosure of sensitive information can have severe implications for individuals, potentially compromising their privacy and security.

LLM08: Excessive Agency

LLM08 focuses on the risk of excessive agency, where an LLM-based system holds more functionality, permissions, or autonomy than its task requires and so can perform actions beyond its intended scope or produce outputs that cause harm. This can manifest in various ways, such as the model engaging in unauthorized financial transactions, generating discriminatory or biased content, or spreading misinformation.

Excessive agency poses a significant threat to organizations and society as a whole. Supply chain compromises and excessive permissions to AI-powered apps can erode trust, damage reputations, and even lead to legal or regulatory repercussions. Moreover, the spread of harmful or offensive content can have detrimental social impacts.
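A common way to limit agency is to give each agent only the tools its task requires. The following is a minimal sketch of that idea, assuming a hypothetical dispatcher that sits between the model's tool requests and the functions that execute them. The role names, tools, and `ToolNotPermitted` exception are illustrative and not taken from any particular framework.

```python
class ToolNotPermitted(Exception):
    """Raised when an agent requests a tool outside its allowlist."""


# Hypothetical tools; a real deployment would call scoped service APIs.
def search_kb(query):
    return f"results for {query!r}"


def issue_refund(order_id, amount):
    return f"refunded {amount} on {order_id}"


TOOLS = {"search_kb": search_kb, "issue_refund": issue_refund}

# Each agent role gets only the tools its task requires.
ALLOWLIST = {
    "support_bot": {"search_kb"},
    "billing_bot": {"search_kb", "issue_refund"},
}


def dispatch(agent_role, tool_name, **kwargs):
    """Run a model-requested tool call only if the role is allowed to."""
    if tool_name not in ALLOWLIST.get(agent_role, set()):
        raise ToolNotPermitted(f"{agent_role} may not call {tool_name}")
    return TOOLS[tool_name](**kwargs)


print(dispatch("support_bot", "search_kb", query="vpn"))
try:
    dispatch("support_bot", "issue_refund", order_id="A1", amount=50)
except ToolNotPermitted as exc:
    print("blocked:", exc)
```

Unknown roles fall through to an empty allowlist, so the default is deny rather than allow.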

LLM10: Model Theft

LLM10 highlights the risk of model theft, where an adversary gains unauthorized access to a trained LLM or its underlying intellectual property. This can enable the adversary to replicate the model's capabilities for malicious purposes, such as generating misleading content, impersonating legitimate users, or conducting cyberattacks.

Model theft poses significant threats to organizations. The loss of intellectual property can lead to financial losses and competitive disadvantages. Moreover, stolen models can be used to spread misinformation, manipulate markets, or launch targeted attacks on individuals or organizations.

Recommendations: Adopting Responsible Data Protection Practices

To mitigate the risks associated with LLM training data, organizations must adopt a comprehensive approach to data protection. This approach should encompass data hygiene, policy enforcement, access controls, and continuous monitoring.

Data hygiene is essential for ensuring the integrity and privacy of LLM training data. Organizations should implement stringent data cleaning and sanitization procedures to remove sensitive information and identify potential biases or errors.

Policy enforcement is crucial for establishing clear guidelines for the handling of LLM training data. These policies should outline acceptable data sources, permissible data types, and restrictions on data access and usage.
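Such policies are easier to enforce when they are expressed as data that a pipeline can check automatically. Below is a small sketch under that assumption. The `DatasetDescriptor` fields, the source names, and the data type labels are hypothetical placeholders for whatever an organization's own catalog provides.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetDescriptor:
    name: str
    source: str
    data_types: set = field(default_factory=set)


# Hypothetical policy values for illustration.
POLICY = {
    "allowed_sources": {"public_docs", "licensed_corpus"},
    "forbidden_data_types": {"pii", "payment_card", "credentials"},
}


def check_policy(dataset, policy=POLICY):
    """Return a list of human-readable violations; empty means compliant."""
    violations = []
    if dataset.source not in policy["allowed_sources"]:
        violations.append(f"source '{dataset.source}' is not approved")
    for dtype in sorted(dataset.data_types & policy["forbidden_data_types"]):
        violations.append(f"data type '{dtype}' is not permitted")
    return violations


ok = DatasetDescriptor("faq", "public_docs", {"text"})
bad = DatasetDescriptor("tickets", "support_export", {"text", "pii"})
print(check_policy(ok))   # []
print(check_policy(bad))
```

A training job could call a check like this as a gate and refuse to start when the returned list is not empty.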

Access controls should restrict LLM training data to authorized personnel and identities only, including any third-party apps that connect to it. This can be achieved through role-based access control (RBAC), zero-trust IAM, and multi-factor authentication (MFA) mechanisms.

Continuous monitoring is essential for detecting and responding to potential threats and vulnerabilities. Organizations should implement real-time monitoring tools to identify suspicious activity and take timely action to prevent data breaches.
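As a simplified illustration of what such monitoring might look for, the sketch below flags identities whose data reads today are far above their own historical baseline. The threshold rule (mean plus `k` standard deviations, with a fixed floor) and the sample identities are assumptions chosen for this example; production tools use richer signals.

```python
from statistics import mean, pstdev


def flag_anomalies(history, today, k=3.0, min_floor=10):
    """Flag identities whose reads today exceed their own baseline.

    history: identity -> list of past daily read counts
    today:   identity -> read count observed today
    """
    flagged = []
    for identity, count in today.items():
        past = history.get(identity, [])
        if not past:
            # No baseline yet: flag anything above a fixed floor.
            if count > min_floor:
                flagged.append(identity)
            continue
        threshold = mean(past) + k * pstdev(past)
        if count > max(threshold, min_floor):
            flagged.append(identity)
    return sorted(flagged)


history = {
    "svc-training": [100, 110, 90, 105, 95],
    "alice": [5, 8, 6, 7, 4],
}
today = {"svc-training": 104, "alice": 400, "new-app": 50}
print(flag_anomalies(history, today))  # ['alice', 'new-app']
```

Comparing each identity against its own history keeps a busy training service from masking a sudden spike by a normally quiet user or app.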

Solutions: Leveraging Technology to Safeguard Data

In the rush to innovate, developers must remain keenly aware of the inherent risks involved with training LLMs if they wish to deliver responsible, effective AI that does not jeopardize their customers' data. Specifically, it is a foremost duty to protect the integrity and privacy of LLM training data sets, which often contain sensitive information.

Preventing data leakage or public disclosure, avoiding overly permissive configurations, and mitigating bias or error that can contaminate such models should be top priorities.

Technological solutions play a pivotal role in safeguarding data integrity and privacy during LLM training. Data security posture management (DSPM) solutions can automate data security processes, enabling organizations to maintain a comprehensive data protection posture.

DSPM solutions provide a range of capabilities, including data discovery, data classification, data access governance (DAG), and data detection and response (DDR). These capabilities help organizations identify sensitive data, enforce access controls, detect data breaches, and respond to security incidents.

Cloud-native DSPM solutions offer enhanced agility and scalability, enabling organizations to adapt to evolving data security needs and protect data across diverse cloud environments.

Sentra: Automating LLM Data Security Processes

Having to worry about securing yet another threat vector should give overburdened security teams pause. But help is available.

Sentra has developed a data privacy and posture management solution that can automatically secure LLM training data in support of rapid AI application development.

The solution works in tandem with AWS SageMaker, GCP Vertex AI, or other AI IDEs to support secure data usage within ML training activities. The solution combines key capabilities including DSPM, DAG, and DDR to deliver comprehensive data security and privacy.

Its cloud-native design discovers all of your data and maintains good data hygiene and security posture through policy enforcement, least-privilege access to sensitive data, and monitoring with near real-time alerting on suspicious identity (user/app/machine) activity, such as data exfiltration, so that attacks or malicious behavior are stopped early. The solution frees developers to innovate quickly and organizations to operate with agility, confident that their customer data and proprietary information will remain protected.

LLMs are now also built into Sentra’s classification engine and data security platform to provide unprecedented classification accuracy for unstructured data.

Conclusion: Securing the Future of AI with Data Privacy

AI holds immense potential to transform our world, but its development and deployment must be accompanied by a steadfast commitment to data integrity and privacy. Protecting the integrity and privacy of data in LLMs is essential for building responsible and ethical AI applications. By implementing data protection best practices, organizations can mitigate the risks associated with data leakage, unauthorized access, and bias. Sentra's DSPM solution provides a comprehensive approach to data security and privacy, enabling organizations to develop and deploy LLMs with speed and confidence.

If you want to learn more about Sentra's Data Security Platform and how LLMs are now integrated into our classification engine to deliver unmatched accuracy for unstructured data, request a demo today.
