What is Sensitive Data Exposure and How to Prevent It

What is Sensitive Data Exposure?

Sensitive data exposure occurs when security measures fail to protect sensitive information from external and internal threats. This leads to unauthorized disclosure of private and confidential data. Attackers often target personal data, such as financial information and healthcare records, as it is valuable and exploitable.

Security teams play a critical role in mitigating sensitive data exposures. They do this by implementing robust security measures. This includes eliminating malicious software, enforcing strong encryption standards, and enhancing access controls. Yet, even with the most sophisticated security measures in place, data breaches can still occur. They often happen through the weakest links in the system.

Organizations must focus on proactive measures to prevent data exposures. They should also put in place responsive strategies to effectively address breaches. By combining the proactive and responsive measures described in the sections below, organizations can protect against sensitive data exposure and maintain the trust of their customers.

Difference Between Data Exposure and Data Breach

Both data exposure and data breaches involve unauthorized access or disclosure of sensitive information. However, they differ in their intent and the underlying circumstances.

Data Exposure

Data exposure occurs when sensitive information is inadvertently disclosed or made accessible to unauthorized individuals or entities. This exposure can happen due to various factors. These include misconfigured systems, human error, or inadequate security measures. Data exposure is typically unintentional. The exposed data may not be actively targeted or exploited.

Data Breach

A data breach, on the other hand, is a deliberate act of unauthorized access to sensitive information with the intent to steal, manipulate, or exploit it. Data breaches are often carried out by cybercriminals or malicious actors seeking financial gain, identity theft, or to disrupt an organization's operations.

Key Differences

The table below summarizes the key differences between sensitive data exposure and data breaches:

Types of Sensitive Data Exposure

Attackers relentlessly pursue sensitive data. They create increasingly sophisticated and inventive methods to breach security systems and compromise valuable information. Their motives range from financial gain to disruption of operations. Ultimately, this causes harm to individuals and organizations alike. There are three main types of data breaches that can compromise sensitive information:

Availability Breach

An availability breach occurs when authorized users are temporarily or permanently denied access to sensitive data. Ransomware commonly uses this method to extort organizations. Such disruptions can impede business operations and hinder essential services. They can also result in financial losses. Addressing and mitigating these breaches is essential to ensure uninterrupted access and business continuity.

Confidentiality Breach

A confidentiality breach occurs when unauthorized entities access sensitive data, infringing upon its privacy and confidentiality. The consequences can be severe. They can include financial fraud, identity theft, reputational harm, and legal repercussions. It's crucial to maintain strong security measures. Doing so prevents breaches and preserves the confidentiality of sensitive information.

Integrity Breach

An integrity breach occurs when unauthorized individuals or entities alter or modify sensitive data, compromising its accuracy and reliability. AI LLM training is particularly vulnerable to this form of breach. Such manipulation can result in misinformation, financial losses, and diminished trust in data quality. Vigilant measures are essential to protect data integrity. They also help reduce the impact of breaches.

How Sensitive Data Gets Exposed

Sensitive data, including vital information like Personally Identifiable Information (PII), financial records, and healthcare data, forms the backbone of contemporary organizations. Unfortunately, weak encryption, unreliable application programming interfaces, and insufficient security practices from development and security teams can jeopardize this invaluable data. Such lapses lead to critical vulnerabilities, exposing sensitive data at three crucial points:

Data in Transit

Data in transit refers to the transfer of data between locations, such as from a user's device to a server or between servers. This data is a prime target for attackers due to its often unencrypted state, making it vulnerable to interception. Key factors contributing to data exposure in transit include weak encryption, insecure protocols, and the risk of man-in-the-middle attacks. It is crucial to address these vulnerabilities to enhance the security of data during transit.
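One way to address weak encryption and insecure protocols on the client side is to refuse legacy TLS versions outright. The following is a minimal sketch using Python's standard `ssl` module; the helper name `build_strict_tls_context` is illustrative, and TLS 1.2 as the minimum version is an assumption that should follow your own policy.

```python
import ssl


def build_strict_tls_context() -> ssl.SSLContext:
    """Return a client-side TLS context that refuses legacy protocol versions."""
    # create_default_context() turns on certificate verification and
    # hostname checking by default.
    context = ssl.create_default_context()
    # Refuse anything older than TLS 1.2 (assumed minimum for this sketch).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = build_strict_tls_context()
```

The resulting context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=context)`, so that connections to servers offering only older protocols fail instead of silently downgrading.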

Data at Rest

While data at rest is less susceptible to interception than data in transit, it remains vulnerable to attacks. Enterprises commonly face internal exposure of sensitive data when data at rest is misconfigured or lacks sufficient access controls. Oversharing and insufficient access restrictions heighten the risk in data lakes and warehouses that house Personally Identifiable Information (PII). To mitigate this risk, it is important to implement robust access controls and monitoring measures. This ensures restricted access and vigilant tracking of data access patterns.
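As a small illustration of checking access controls on data at rest, the sketch below flags files that users outside the owner and group can read or write. It assumes POSIX-style file permissions, and the function name `is_world_accessible` is hypothetical. Cloud object stores and data warehouses have their own permission models that this does not cover.

```python
import os
import stat
import tempfile


def is_world_accessible(path: str) -> bool:
    """Return True if users outside the owner and group can read or write the file."""
    mode = os.stat(path).st_mode
    return bool(mode & (stat.S_IROTH | stat.S_IWOTH))


# Demonstration on a throwaway file (POSIX permissions assumed).
with tempfile.NamedTemporaryFile(delete=False) as handle:
    demo_path = handle.name

os.chmod(demo_path, 0o644)  # owner read/write, everyone else read
exposed_before = is_world_accessible(demo_path)

os.chmod(demo_path, 0o600)  # owner read/write only
exposed_after = is_world_accessible(demo_path)

os.remove(demo_path)
```

A check like this could run on a schedule over directories that hold sensitive exports, with any flagged path reported to the security team.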

Data in Use

Data in use is the most vulnerable to attack, as it is often unencrypted and can be accessed by multiple users and applications. In cloud computing environments, development teams usually gather data and cache it on mounts or in memory to boost performance and reduce I/O. These cached copies create sensitive data exposure vulnerabilities because other teams or cloud providers can access them. Security teams need to adopt standard data handling practices. For example, they should clean the data from third-party or cloud mounts after use and disable caching.

What Causes Sensitive Data Exposure?

Sensitive data exposure results from a combination of internal and external factors. Internally, DevSecOps and Business Analytics teams play a significant role in unintentional data exposures. External threats usually come from hackers and malicious actors. Mitigating these risks requires a comprehensive approach to safeguarding data integrity and maintaining a resilient security posture.

Internal Causes of Sensitive Data Exposure

- No or Weak Encryption: Encryption and decryption algorithms are the keys to safeguarding data. Sensitive data exposures occur due to weak cryptography protocols. They also occur due to a lack of encryption or hashing mechanisms.

- Insecure Passwords: Insecure password practices and insufficient validation checks compromise enterprise security, facilitating data exposure.

- Unsecured Web Pages: Web servers deliver JSON payloads to frontend API handlers. Attackers can easily exploit the data exchange between server and client when users browse unsecured web pages with weak SSL and TLS certificates.

- Poor Access Controls and Misconfigurations: Insufficient multi-factor authentication (MFA) or excessive permissioning and unreliable security posture management contribute to sensitive data exposure through misconfigurations.

- Insider Threat Attacks: Current or former employees may unintentionally or intentionally target data, posing risks to organizational security and integrity.

External Causes of Sensitive Data Exposure

- SQL Injection: SQL injection happens when attackers introduce malicious queries and SQL blocks into server requests. This lets them tamper with backend queries to retrieve or alter data.

- Network Compromise: A network compromise occurs when unauthorized users gain control of backend services or servers. This compromises network integrity, risking resource theft or data alteration.

- Phishing Attacks: Phishing messages typically contain malicious links. They exploit urgency, tricking recipients into disclosing sensitive information like login credentials or personal details.

- Supply Chain Attacks: When third-party service providers or vendors are compromised, attackers can exploit the systems that depend on them, and sensitive data may be unintentionally exposed publicly.
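Of the external causes above, SQL injection is the easiest to demonstrate in a few lines. The sketch below uses Python's built-in `sqlite3` module with a made-up `users` table and sample data, and contrasts a query built by string concatenation with a parameterized one. It is an illustration of the general technique, not a description of any particular product's defenses.

```python
import sqlite3

connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE users (name TEXT, email TEXT)")
connection.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "alice@example.com"), ("bob", "bob@example.com")],
)

malicious_input = "nobody' OR '1'='1"

# Vulnerable: the input is spliced into the SQL text, so the attacker's
# OR clause becomes part of the query and matches every row.
unsafe_query = f"SELECT email FROM users WHERE name = '{malicious_input}'"
leaked_rows = connection.execute(unsafe_query).fetchall()

# Safer: the ? placeholder sends the input as a bound value, so it is
# compared as a literal string and matches nothing.
safe_rows = connection.execute(
    "SELECT email FROM users WHERE name = ?", (malicious_input,)
).fetchall()
```

Here `leaked_rows` contains both email addresses, while `safe_rows` is empty because no user is literally named `nobody' OR '1'='1`.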

Impact of Sensitive Data Exposure

Exposing sensitive data poses significant risks. Sensitive data encompasses private details like health records, user credentials, and biometric data. Accountability legislation, such as the Accountability Act, requires organizations to safeguard granular user information. Failure to prevent unauthorized exposure can result in severe consequences, including identity theft and compromised user privacy. It can also lead to regulatory and legal repercussions and potential corruption of databases and infrastructure. Organizations must focus on stringent measures to mitigate these risks.


Examples of Sensitive Data Exposure

Prominent companies, including Atlassian, LinkedIn, and Equifax, have unfortunately become notable examples of sensitive data exposure incidents. Analyzing these cases provides insights into the causes and repercussions of such data exposure. It offers valuable lessons for enhancing data security measures.

Atlassian Jira (2019)

In 2019, Atlassian Jira, a project management tool, experienced significant data exposure. The exposure resulted from a configuration error. A misconfiguration in global permission settings allowed unauthorized access to sensitive information. This included names, email addresses, project details, and assignee data. The issue originated from incorrect permissions granted during the setup of filters and dashboards in JIRA.

LinkedIn (2021)

LinkedIn, a widely used professional social media platform, experienced an incident in which data on approximately 92% of its users was extracted through web scraping. The security incident was attributed to insufficient webpage protection and the absence of effective mechanisms to prevent web crawling activity.

Equifax (2017)

In 2017, Equifax Ltd., the UK affiliate of credit reporting company Equifax Inc., faced a significant data breach. Hackers infiltrated Equifax servers in the US, impacting over 147 million individuals, including 13.8 million UK users. Equifax failed to meet security obligations. It outsourced security management to its US parent company. This led to the exposure of sensitive data such as names, addresses, phone numbers, dates of birth, Equifax membership login credentials, and partial credit card information.

Cost of Compliance Fines

Data exposure poses significant risks, whether the data is at rest or in transit. Attackers target various kinds of sensitive information, including protected health data, biometrics for AI systems, and personally identifiable information (PII). Regardless of where the exposure occurs, compliance costs depend on multiple factors and shift with the regulatory landscape.

Enterprises failing to safeguard data face substantial monetary fines or imprisonment. The penalty depends on the impact of the exposure. Fines can range from millions to billions, and compliance costs involve valuable resources and time. Thus, safeguarding sensitive data is imperative for mitigating reputation loss and upholding industry standards.

How to Determine if You Are Vulnerable to Sensitive Data Exposure?

Detecting security vulnerabilities in the vast array of threats to sensitive data is a challenging task. Unauthorized access often occurs due to lax data classification and insufficient access controls. Enterprises must adopt additional measures to assess their vulnerability to data exposure.

Deep scans, access-level validation, and robust monitoring are crucial steps, as is detecting unusual access patterns. In addition, using advanced reporting systems to swiftly detect anomalies and take preventive measures in case of a breach is an effective strategy for proactively safeguarding sensitive data.
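To make "unusual access patterns" concrete, here is a deliberately simple sketch that flags users whose access count is far above the median for their peers. The log format, the `find_unusual_users` name, and the multiplier of 5 are all assumptions chosen for illustration. Production monitoring would typically consider time windows, resource sensitivity, and per-user baselines.

```python
from collections import Counter
from statistics import median


def find_unusual_users(access_log, multiplier=5):
    """Return users whose access count exceeds `multiplier` times the median count."""
    counts = Counter(user for user, _resource in access_log)
    typical = median(counts.values())
    return sorted(user for user, count in counts.items() if count > multiplier * typical)


# Hypothetical access log of (user, resource) pairs.
access_log = (
    [("alice", "customers.csv")] * 3
    + [("bob", "customers.csv")] * 4
    + [("carol", "customers.csv")] * 2
    + [("mallory", "customers.csv")] * 40
)

flagged = find_unusual_users(access_log)
```

The median is used instead of the mean so that a single heavy reader does not raise the baseline and hide itself.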

Automation is key as well, because it allows overburdened security teams to keep pace with dynamic cloud use and data proliferation. Automating discovery and classification frees up resources, and doing so in a highly autonomous manner, without huge setup and configuration efforts, can greatly help.
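As a rough idea of what automated discovery and classification involves at its simplest, the sketch below scans text records for two kinds of sensitive data using regular expressions. The patterns, labels, and sample records are illustrative assumptions. Commercial classifiers rely on many more signals (context, validation, machine learning) and will behave differently.

```python
import re

# Illustrative patterns only; real classifiers use many more signals.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def classify_text(text):
    """Return the sorted labels of every pattern found in the text."""
    return sorted(label for label, pattern in PATTERNS.items() if pattern.search(text))


# Hypothetical records, keyed by an identifier.
records = {
    "note_1": "Contact jane.doe@example.com about the renewal.",
    "note_2": "SSN on file: 123-45-6789",
    "note_3": "Meeting moved to Tuesday.",
}

findings = {name: classify_text(body) for name, body in records.items()}
```

Records with a non-empty label list can then be routed to stricter access controls or reviewed for removal.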

How to Prevent Sensitive Data Exposure

Effectively managing sensitive data demands rigorous preventive measures to avert exposure. Widely embraced as best practices, these measures serve as a strategic shield against breaches. The following points focus on specific areas of vulnerability. They offer practical solutions to either eliminate potential sensitive data exposures or promptly respond to them:

Assess Risks Associated with Data

The initial stages of data and access onboarding serve as gateways to potential exposure. Conducting a thorough assessment, continual change monitoring, and implementing stringent access controls for critical assets significantly reduces the risks of sensitive data exposure. This proactive approach marks the first step to achieving a strong data security posture.

Minimize Data Surface Area

Overprovisioning and excessive sharing create complexities. This turns issue isolation, monitoring, and maintenance into challenges. Without strong security controls, every part of the environment, platform, resources, and data transactions poses security risks. Opting for a less-is-more approach is ideal. This is particularly true when dealing with sensitive information like protected health data and user credentials. By minimizing your data attack surface, you mitigate the risk of cloud data leaks.

Store Passwords Using Salted Hashing Functions and Leverage MFA

Securing databases, portals, and services hinges on safeguarding passwords. This prevents unauthorized access to sensitive data. It is crucial to handle password protection and storage with precision. Store passwords with salted, one-way hashing algorithms designed for password storage rather than reversible encryption, so that a stolen database does not reveal the original passwords. Adding an extra layer of security through multi-factor authentication strengthens the defense against potential breaches even more.
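A minimal sketch of salted password hashing with Python's standard library follows. It uses PBKDF2-HMAC-SHA256 from `hashlib`. The function names and the iteration count are assumptions for illustration, and the work factor should be tuned to current guidance and your hardware. Other algorithms built for password storage, such as scrypt or Argon2, are also reasonable choices.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumed work factor; tune for your environment


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return a fresh random salt and the derived hash for the password."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Re-derive the hash with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)


salt, stored_digest = hash_password("correct horse battery staple")
```

Only the salt and the digest are stored. Because each password gets its own random salt, two users with the same password end up with different digests, which defeats precomputed lookup tables.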

Disable Autocomplete and Caching

Cached data poses significant vulnerabilities and risks of data breaches. Enterprises often use auto-complete features, requiring the storage of data on local devices for convenient access. Common instances include passwords stored in browser sessions and cache. In cloud environments, attackers exploit computing instances. They access sensitive cloud data by exploiting instances where data caching occurs. Mitigating these risks involves disabling caching and auto-complete features in applications. This effectively prevents potential security threats.
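For web applications, disabling caching and auto-complete usually comes down to a response header and a form attribute. The sketch below is framework-agnostic: the helper name `with_no_store_headers` and the sample form field are hypothetical, while `Cache-Control: no-store` and `autocomplete="off"` are standard HTTP and HTML mechanisms.

```python
def with_no_store_headers(headers=None):
    """Return a copy of the response headers with caching disabled."""
    merged = dict(headers or {})
    # "no-store" tells browsers and intermediaries not to keep a copy.
    merged["Cache-Control"] = "no-store"
    # "Pragma" is kept for legacy HTTP/1.0 caches.
    merged["Pragma"] = "no-cache"
    return merged


# autocomplete="off" asks the browser not to remember the field value.
# Browsers may ignore the hint for login fields.
SENSITIVE_FIELD_HTML = '<input type="text" name="card_number" autocomplete="off">'

response_headers = with_no_store_headers({"Content-Type": "text/html"})
```

Applying the headers only to responses that carry sensitive data keeps the performance benefit of caching for everything else.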

Fast and Effective Breach Response

Instances of personal data exposure stemming from threats like man-in-the-middle and SQL injection attacks necessitate swift and decisive action. External data exposure carries a heightened impact compared to internal incidents. Combatting data breaches demands a responsive approach. It's often facilitated by widely adopted strategies. These include Data Detection and Response (DDR), Security Orchestration, Automation, and Response (SOAR), User and Entity Behavior Analytics (UEBA), and the renowned Zero Trust Architecture featuring Predictive Analytics (ZTPA).

Tools to Prevent Sensitive Data Exposure

Shielding sensitive information demands a dual approach—internally and externally. Unauthorized access can be prevented through vigilant monitoring, diligent analysis, and swift notifications to both security teams and affected users. Effective tools, whether in-house or third-party, are indispensable in preventing data exposure.

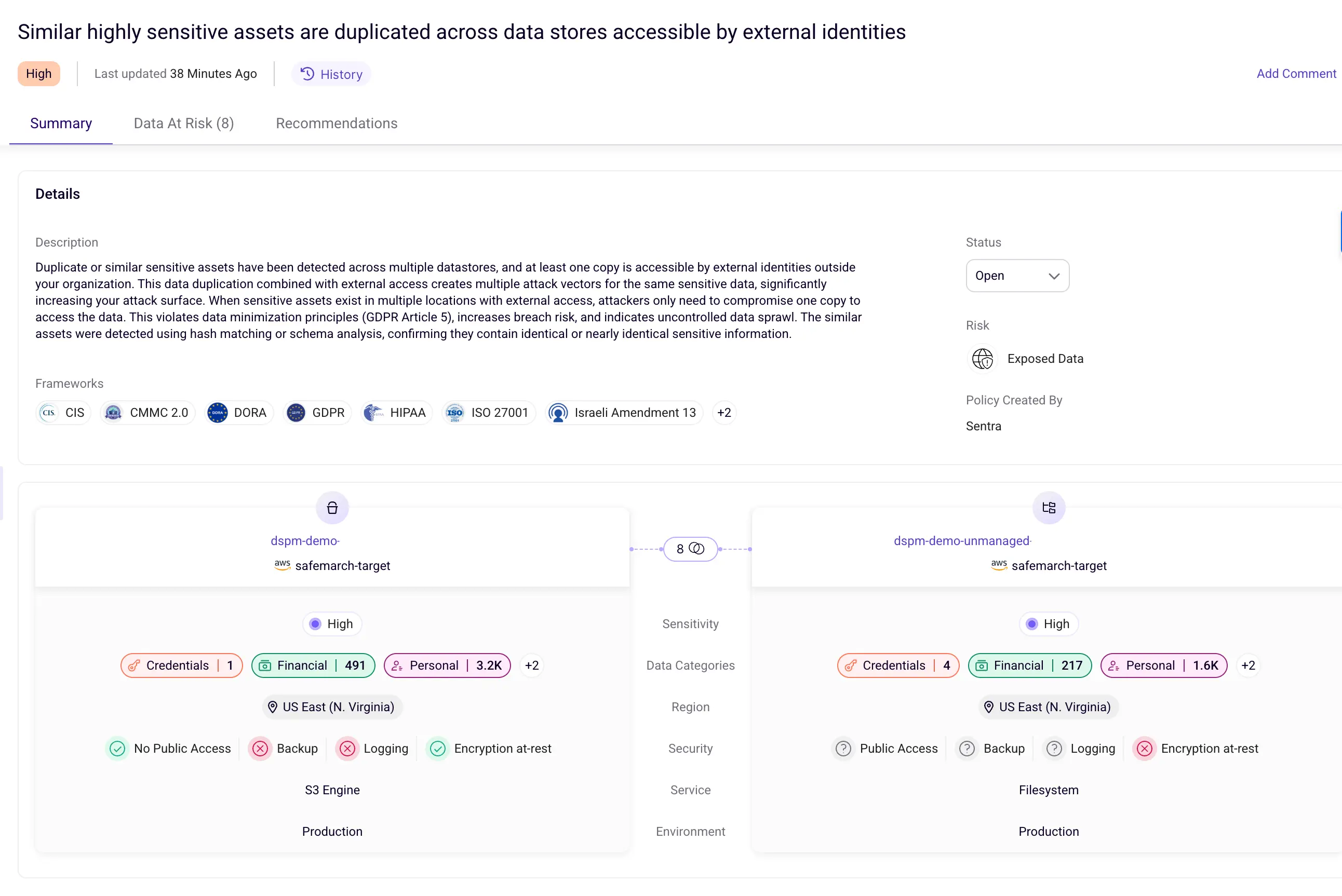

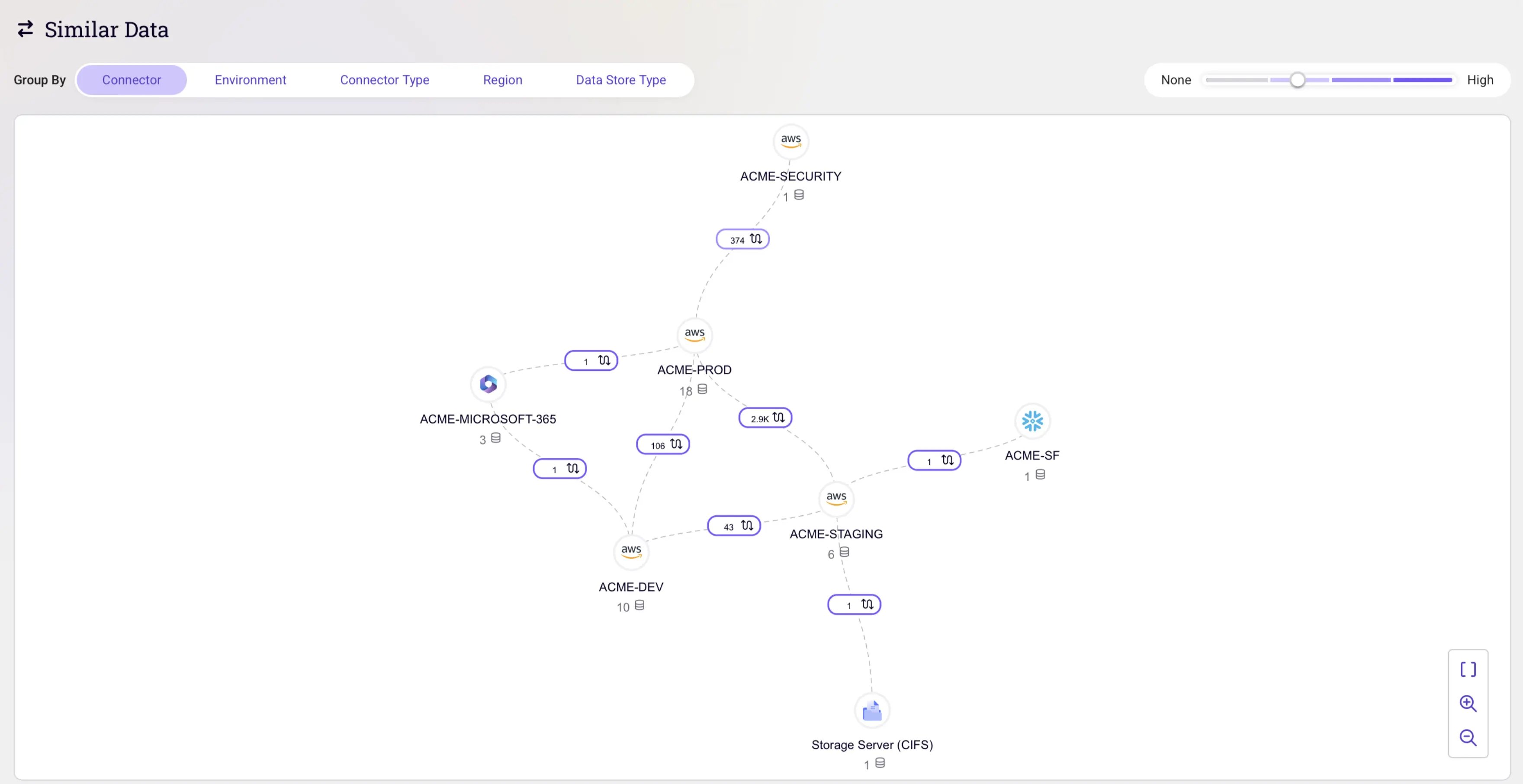

Data Security Posture Management (DSPM) is designed to meet the changing requirements of security, ensuring a thorough and meticulous approach to protecting sensitive data. Tools compliant with DSPM standards usually feature data tokenization and masking, seamlessly integrated into their services. This ensures that data transmission and sharing remains secure.

These tools also often have advanced security features. Examples include detailed access controls, specific access patterns, behavioral analysis, and comprehensive logging and monitoring systems. These features are essential for identifying and providing immediate alerts about any unusual activities or anomalies.
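The masking and tokenization mentioned above can be sketched in a few lines. This is a simplified illustration, not how any specific DSPM tool works: masking keeps only the last four digits of a card number, and the keyed hash stands in for tokenization by producing a stable, non-reversible token. Vault-based tokenization, which keeps a protected lookup table so tokens can be mapped back, is a different design. The function names and the placeholder key are assumptions.

```python
import hashlib
import hmac


def mask_card_number(card_number: str) -> str:
    """Replace all but the last four digits with asterisks."""
    digits = [ch for ch in card_number if ch.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


def tokenize(value: str, secret_key: bytes) -> str:
    """Derive a stable, non-reversible token from a value with a keyed hash."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


SECRET_KEY = b"demo-key-do-not-use"  # placeholder; load from a secrets manager

masked = mask_card_number("4111 1111 1111 1111")
token = tokenize("jane.doe@example.com", SECRET_KEY)
```

Because the same input always yields the same token under a given key, tokenized columns can still be joined or counted without revealing the underlying values.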

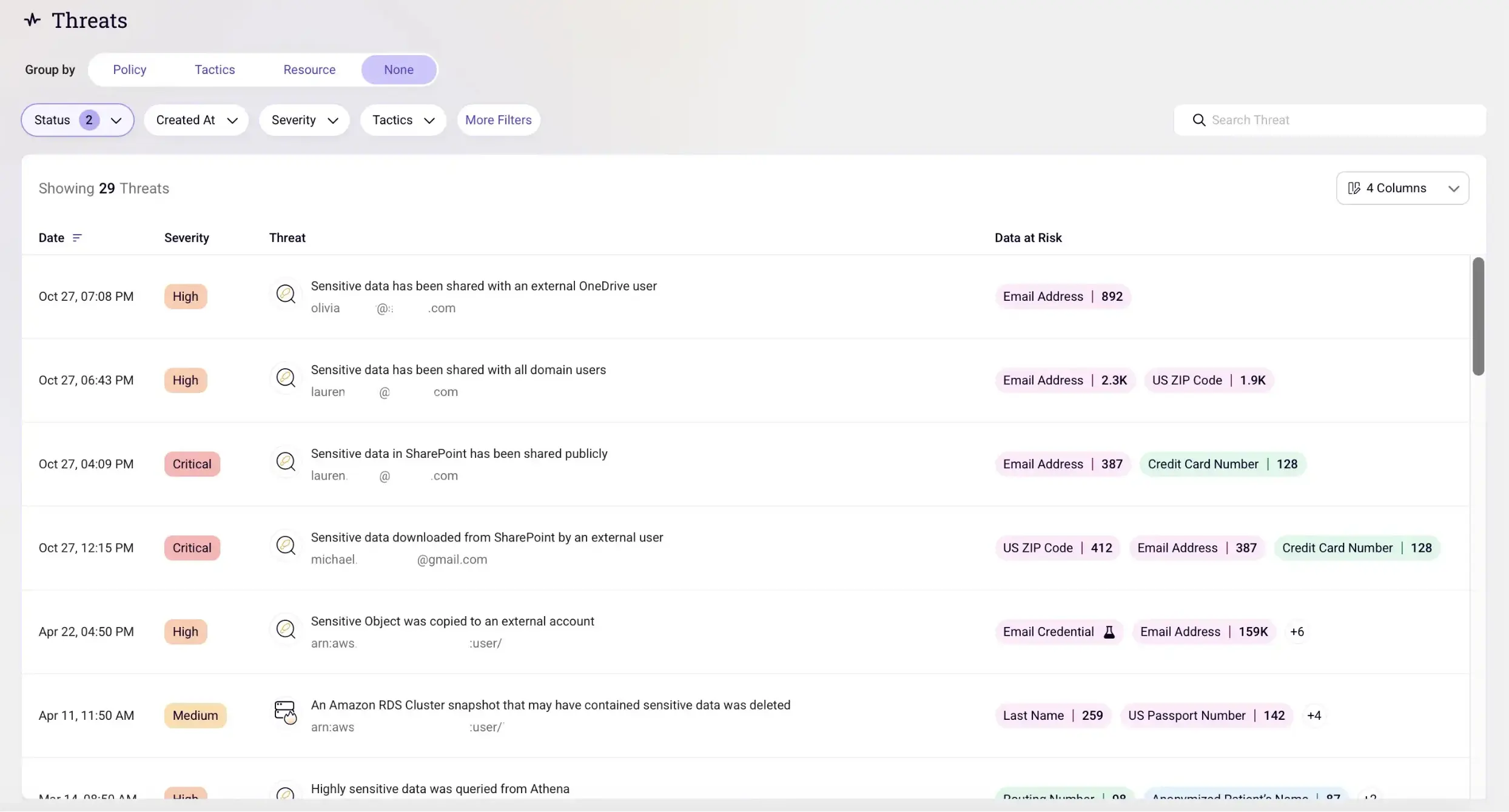

Sentra emerges as an optimal solution, boasting sophisticated data discovery and classification capabilities. It continuously evaluates data security controls and issues automated notifications. This addresses critical data vulnerabilities ingrained in its core.

Conclusion

In the era of cloud transformation and digital adoption, data emerges as the driving force behind innovations. Personally Identifiable Information (PII), which is a specific type of sensitive data, is crucial for organizations to deliver personalized offerings that cater to user preferences. The value inherent in data, both monetarily and personally, places it at the forefront, and attackers continually seek opportunities to exploit enterprise missteps.

Failure to adopt secure access and standard security controls by data-holding enterprises can lead to sensitive data exposure. Unaddressed, this vulnerability becomes a breeding ground for data breaches and system compromises. Elevating enterprise security involves implementing data security posture management and deploying robust security controls. Advanced tools with built-in data discovery and classification capabilities are essential to this success. Stringent security protocols fortify the tools, safeguarding data against vulnerabilities and ensuring the resilience of business operations.

If you want to learn more about how you can prevent sensitive data exposure, request a demo with our data security experts today.
