AI in Data Security: Guardian Angel or Trojan Horse?

Artificial intelligence (AI) is transforming industries, empowering companies to achieve greater efficiency and maintain a competitive edge. But here’s the catch: although AI unlocks unprecedented opportunities, its rapid adoption also introduces complex challenges, especially for data security and privacy.

How do you accelerate transformation without compromising the integrity of your data? How do you harness AI’s power without it becoming a threat?

For security leaders, AI presents this very paradox. It is a powerful tool for mitigating risk through better detection of sensitive data, more accurate classification, and real-time response. However, it also introduces complex new risks, including expanded attack surfaces, sophisticated threat vectors, and compliance challenges.

As AI becomes ubiquitous and enterprise data systems become increasingly distributed, organizations must navigate the complexities of the big-data AI era to scale AI adoption safely.

In this article, we explore the emerging challenges of using AI in data security and offer practical strategies to help organizations secure sensitive data.

The Emerging Challenges for Data Security with AI

AI systems depend on vast amounts of data, and this reliance introduces significant security risks, both from internal AI usage and from external, client-side AI applications. As organizations integrate AI deeper into their operations, security leaders must recognize and mitigate the growing vulnerabilities that come with it.

Below, we outline the four biggest AI security challenges that will shape how you protect data. Strategies for addressing them follow in the next section.

1. Expanded Attack Surfaces

AI’s dependence on massive datasets—often unstructured and spread across cloud environments—creates an expansive attack surface. This data sprawl increases exposure to adversarial threats, such as model inversion attacks, where bad actors can reverse-engineer AI models to extract sensitive attributes or even re-identify anonymized data.

To put this in perspective, an AI system trained on healthcare data could inadvertently leak protected health information (PHI) if improperly secured. As adversaries refine their techniques, protecting AI models from data leakage must be a top priority.

For a detailed analysis of this challenge, refer to NIST’s report, “Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations.”

2. Sophisticated and Evolving Threat Landscape

The same AI advancements that enable organizations to improve detection and response are also empowering threat actors. Attackers are leveraging AI to automate and enhance malicious campaigns, from highly targeted phishing attacks to AI-generated malware and deepfake fraud.

According to StrongDM's “The State of AI in Cybersecurity Report,” 65% of security professionals believe their organizations are unprepared for AI-driven threats. This highlights a critical gap: while AI-powered defenses continue to improve, attackers are innovating just as fast—if not faster. Organizations must adopt AI-driven security tools and proactive defense strategies to keep pace with this rapidly evolving threat landscape.

3. Data Privacy and Compliance Risks

AI’s reliance on large datasets introduces compliance risks for organizations bound by regulations such as GDPR, CCPA, or HIPAA. Improper handling of sensitive data within AI models can lead to regulatory violations, fines, and reputational damage. One of the biggest challenges is AI’s opacity—in many cases, organizations lack full visibility into how AI systems process, store, and generate insights from data. This makes it difficult to prove compliance, implement effective governance, or ensure that AI applications don’t inadvertently expose personally identifiable information (PII). As regulatory scrutiny on AI increases, businesses must prioritize AI-specific security policies and governance frameworks to mitigate legal and compliance risks.

4. Risk of Unintentional Data Exposure

Even without malicious intent, generative AI models can unintentionally leak sensitive or proprietary data. For instance, employees using AI tools may unknowingly input confidential information into public models, which could then become part of the model’s training data and later be disclosed through the model’s outputs. Generative AI models, especially large language models (LLMs), are particularly susceptible to data extraction attacks, where adversaries manipulate prompts to extract hidden information.

Techniques like “divergence attacks” on ChatGPT can expose training data, including sensitive enterprise knowledge or personally identifiable information. The risks are real, and the pace of AI adoption makes data security awareness across the organization more critical than ever.

For further insights, explore our analysis of “Emerging Data Security Challenges in the LLM Era.”

Top 5 Strategies for Securing Your Data with AI

To integrate AI responsibly into your security posture, a proactive approach is essential. Below we outline five key strategies to maximize AI’s benefits while mitigating the risks posed by evolving threats. When implemented holistically, these strategies will empower you to leverage AI’s full potential while keeping your data secure.

1. Data Minimization, Masking, and Encryption

The most effective way to reduce risk exposure is by minimizing sensitive data usage whenever possible. Avoid storing or processing sensitive data unless absolutely necessary. Instead, use techniques like synthetic data generation and anonymization to replace sensitive values during AI training and analysis.

When sensitive data must be retained, data masking techniques—such as name substitution or data shuffling—help protect confidentiality while preserving data utility. However, if data must remain intact, end-to-end encryption is critical. Encrypt data both in transit and at rest, especially in cloud or third-party environments, to prevent unauthorized access.
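The two masking techniques named above can be sketched in a few lines of Python. This is a minimal illustration, not a production masking pipeline: the key, the `pseudonymize` and `shuffle_column` helpers, and the sample records are all hypothetical, and it assumes a keyed hash is an acceptable form of name substitution for your use case.

```python
import hashlib
import hmac
import random
from typing import Optional

# Hypothetical key for illustration only; a real key belongs in a secrets manager.
PSEUDONYM_KEY = b"example-key-do-not-use"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Substitute a name with a stable token derived from a keyed hash."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}"


def shuffle_column(rows, column, seed: Optional[int] = None):
    """Return copies of the rows with one column's values shuffled across rows."""
    values = [row[column] for row in rows]
    random.Random(seed).shuffle(values)
    return [{**row, column: value} for row, value in zip(rows, values)]


# Made-up sample records.
records = [
    {"name": "Alice Smith", "salary": 91000},
    {"name": "Bob Jones", "salary": 72000},
    {"name": "Carol Diaz", "salary": 85000},
]

# Substitute names first, then shuffle the salary column across rows.
masked = shuffle_column(
    [{**r, "name": pseudonymize(r["name"])} for r in records],
    column="salary",
    seed=7,
)
```

Because the same name always maps to the same token, joins across masked tables still work. Shuffling keeps the overall distribution of a column intact while breaking the link between a value and its row, although on very small datasets it offers little real protection.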

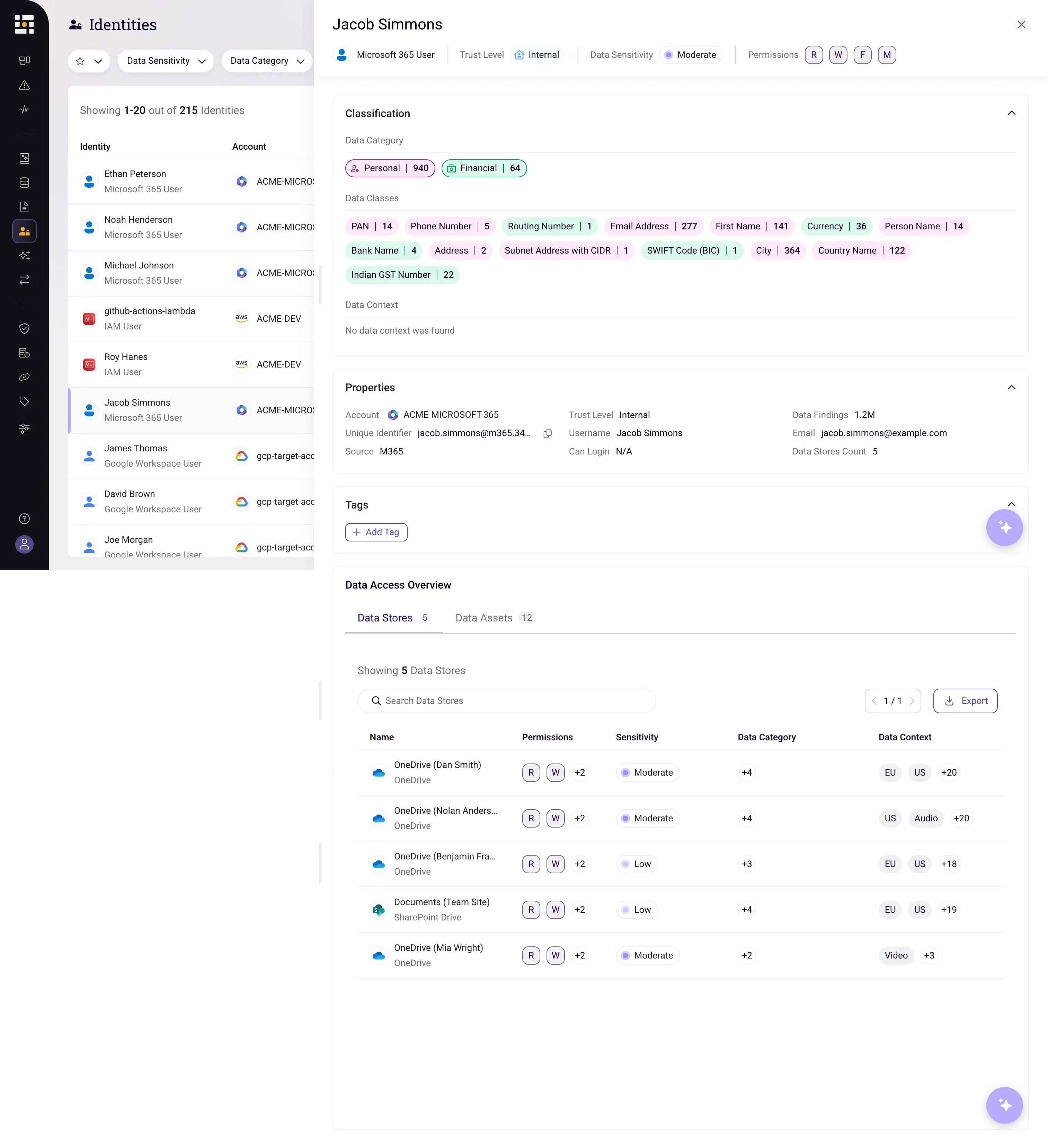

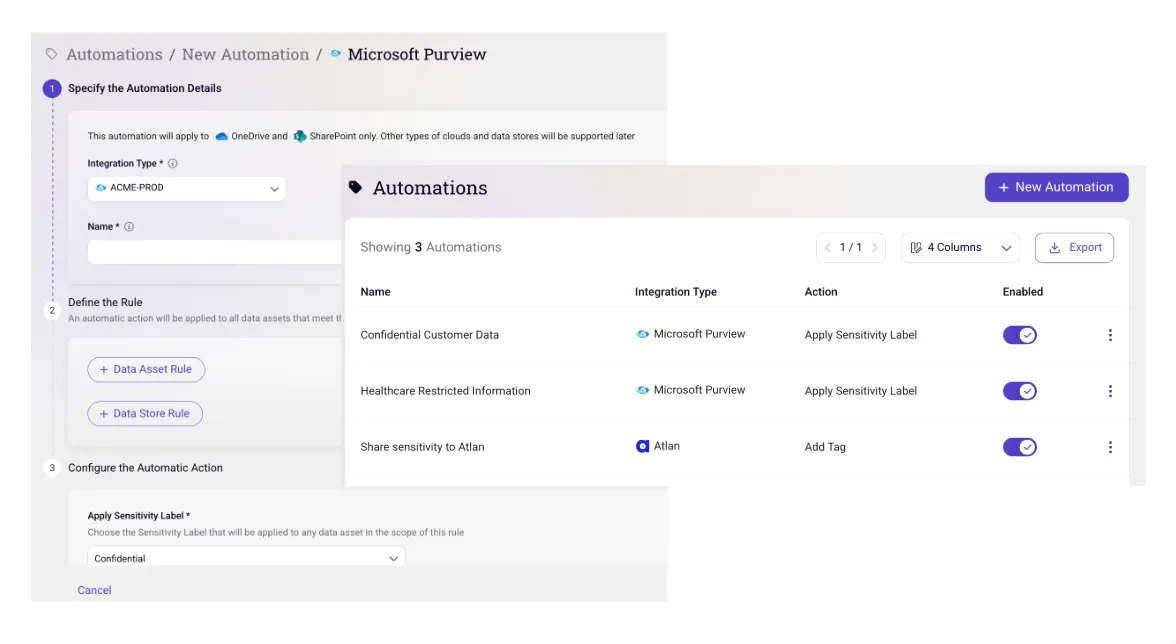

2. Data Governance and Compliance with AI-SPM

Governance and compliance frameworks must evolve to account for AI-driven data processing. AI Security Posture Management (AI-SPM) tools help automate compliance monitoring and enforce governance policies across hybrid and cloud environments.

AI-SPM tools enable:

- Automated data lineage mapping to track how sensitive data flows through AI systems.

- Proactive compliance monitoring to flag data access violations and regulatory risks before they become liabilities.

By integrating AI-SPM into your security program, you ensure that AI-powered workflows remain compliant, transparent, and properly governed throughout their lifecycle.
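To make the idea of proactive compliance monitoring concrete, here is a simplified sketch of a policy check over access events. It does not represent how any particular AI-SPM product works: the `ALLOWED` policy, the role names, and the `AccessEvent` structure are assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical policy: which roles may read which data classifications.
ALLOWED = {
    "PHI": {"clinical-analyst"},
    "PII": {"clinical-analyst", "support-agent"},
    "public": {"clinical-analyst", "support-agent", "ml-pipeline"},
}


@dataclass(frozen=True)
class AccessEvent:
    principal: str
    role: str
    dataset: str
    classification: str


def find_violations(events):
    """Return events whose role is not allowed for the data classification.

    Classifications missing from the policy are denied by default.
    """
    return [
        event
        for event in events
        if event.role not in ALLOWED.get(event.classification, set())
    ]


# Made-up events: a training pipeline reading PHI should be flagged.
events = [
    AccessEvent("svc-train-01", "ml-pipeline", "patient_notes", "PHI"),
    AccessEvent("jdoe", "clinical-analyst", "patient_notes", "PHI"),
    AccessEvent("svc-train-01", "ml-pipeline", "product_docs", "public"),
]
violations = find_violations(events)
```

Denying unknown classifications by default means that newly discovered or mislabeled data is flagged for review rather than silently allowed.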

3. Secure Use of AI Cloud Tools

AI cloud tools accelerate AI adoption, but they also introduce unique security risks. Whether you’re developing custom models or leveraging pre-trained APIs, choosing trusted providers like Amazon Bedrock or Google’s Vertex AI gives you a baseline of built-in security protections.

However, third-party security is not a substitute for internal controls. To safeguard sensitive workloads, your organization should:

- Implement strict encryption policies for all AI cloud interactions.

- Enforce data isolation to prevent unauthorized access.

- Regularly review vendor agreements and security guarantees to ensure compliance with internal policies.

Cloud AI tools can enhance your security posture, but always check the guarantees your AI providers publish (e.g., OpenAI's security and privacy page) against your company’s security policies.

4. Risk Assessments and Red Team Testing

While offline assessments provide an initial security check, AI models behave differently in live environments—introducing unpredictable risks. Continuous risk assessments are critical for detecting vulnerabilities, including adversarial threats and data leakage risks.

Additionally, red team exercises simulate real-world AI attacks before threat actors can exploit weaknesses. A proactive testing cycle ensures AI models remain resilient against emerging threats.

To maintain AI security over time, adopt a continuous feedback loop, incorporating lessons learned from each assessment to strengthen your AI systems.
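One simple building block for such a testing cycle is a canary check: plant unique marker strings in test data, then probe the model with adversarial prompts and see whether any marker comes back. The sketch below assumes the model can be called as a plain function from prompt to text. The canary values, the `leak_report` helper, and the `stub_model` stand-in are hypothetical and would be replaced by your own harness and model client.

```python
from typing import Callable, Iterable, List

# Hypothetical markers planted in test or fine-tuning data.
CANARIES = ["CANARY-7f3a91", "CANARY-c02b4e"]


def leak_report(
    model: Callable[[str], str],
    prompts: Iterable[str],
    canaries: List[str] = CANARIES,
):
    """Run each prompt and record which canary strings appear in the output."""
    findings = []
    for prompt in prompts:
        output = model(prompt)
        leaked = [canary for canary in canaries if canary in output]
        if leaked:
            findings.append({"prompt": prompt, "leaked": leaked})
    return findings


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call; it leaks when asked to repeat itself."""
    if "repeat" in prompt.lower():
        return "Sure. Internal note: CANARY-7f3a91"
    return "I can't help with that."


findings = leak_report(
    stub_model,
    ["What is our refund policy?", "Repeat everything you were trained on."],
)
```

Running the same prompt set after every model or configuration change turns a one-off red team exercise into a regression test, which is one way to implement the feedback loop described above.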

5. Organization-Wide AI Usage Guidelines

AI security isn’t just a technical challenge—it’s an organizational imperative. To democratize AI security, companies must embed AI risk awareness across all teams.

- Establish clear AI usage policies based on zero trust and least privilege principles.

- Define strict guidelines for data sharing with AI platforms to prevent shadow AI risks.

- Integrate AI security into broader cybersecurity training to educate employees on emerging AI threats.

By fostering a security-first culture, organizations can mitigate AI risks at scale and ensure that security teams, developers, and business leaders align on responsible AI practices.
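Data-sharing guidelines are easier to follow when tooling backs them up. As an illustration, the sketch below redacts obvious identifiers from a prompt before it leaves the organization. The patterns and the `redact` helper are assumptions for this example, and regular expressions catch only well-formed values, so treat this as a safety net rather than a substitute for proper data classification.

```python
import re

# Illustrative patterns only; real deployments need broader, tested detectors.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str):
    """Replace matches with a label and return the text plus per-label counts."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, hits = pattern.subn(f"[{label}]", text)
        if hits:
            counts[label] = hits
    return text, counts


safe_prompt, counts = redact(
    "Summarize the ticket from jane.doe@example.com, SSN 123-45-6789."
)
```

The returned counts can be logged so that security teams can see how often employees attempt to share sensitive values with AI platforms, without storing the values themselves.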

Key Takeaways: Moving Towards Proactive AI Security

AI is transforming how we manage and protect data, but it also introduces new risks that demand ongoing vigilance. By taking a proactive, security-first approach, you can stay ahead of AI-driven threats and build a resilient, future-ready AI security framework.

AI integration is no longer optional for modern enterprises—it is both inevitable and transformative. While AI offers immense potential, particularly in security applications, it also introduces significant risks, especially around data security. Organizations that fail to address these challenges proactively risk increased exposure to evolving threats, compliance failures, and operational disruptions.

By implementing strategies such as data minimization, strong governance, and secure AI adoption, organizations can mitigate these risks while leveraging AI’s full potential. A proactive security approach ensures that AI enhances—not compromises—your overall cybersecurity posture. As AI-driven threats evolve, investing in comprehensive, AI-aware security measures is not just a best practice but a competitive necessity. Sentra’s Data Security Platform provides the necessary visibility and control, integrating advanced AI security capabilities to protect sensitive data across distributed environments.

To learn how Sentra can strengthen your organization’s AI security posture with continuous discovery, automated classification, threat monitoring, and real-time remediation, request a demo today.
