Best Practices: Automatically Tag and Label Sensitive Data

The Importance of Data Labeling and Tagging

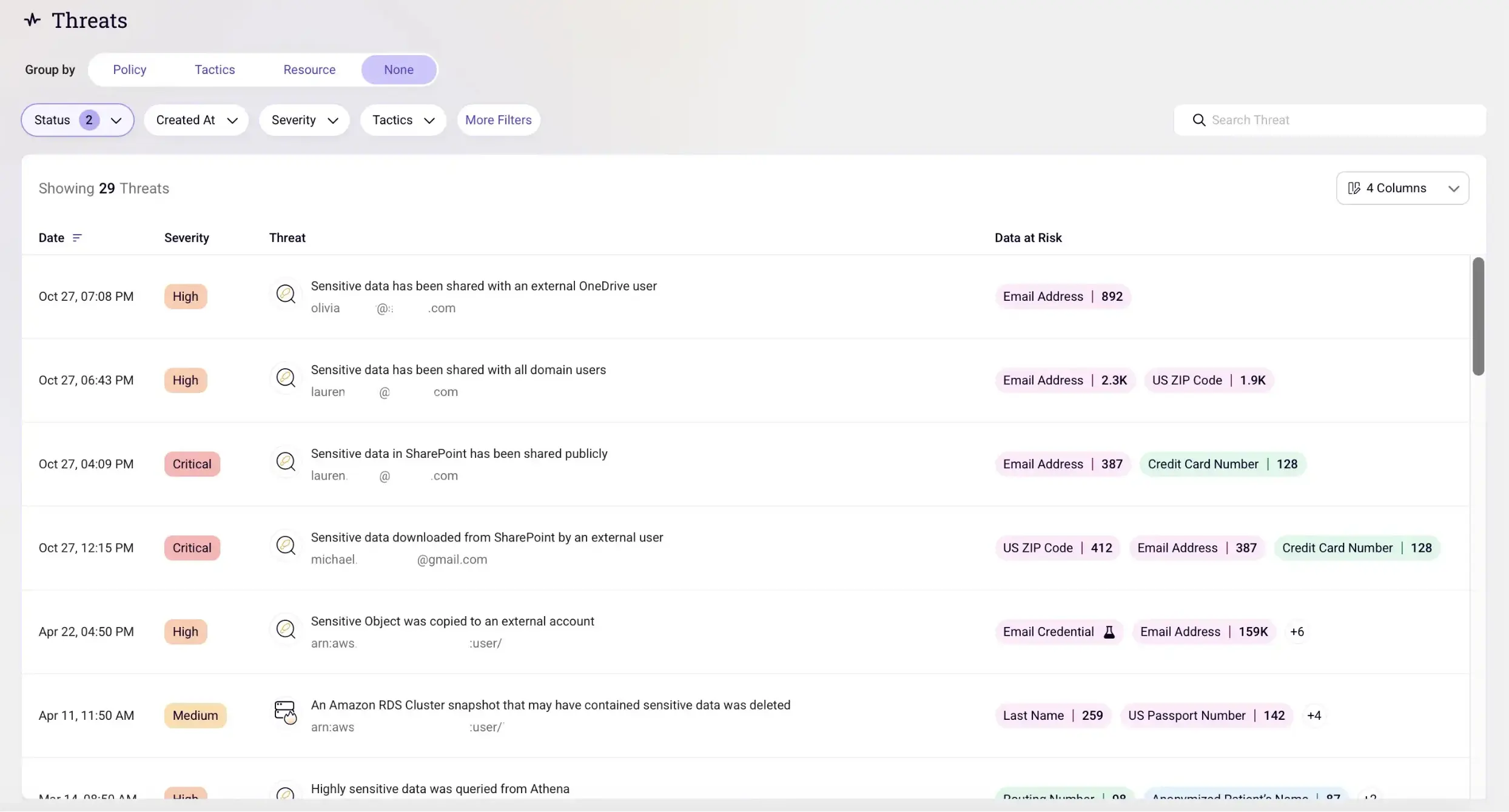

In today's fast-paced business environment, data rarely stays in one place. It moves across devices, applications, and services as individuals collaborate with internal teams and external partners. This mobility is essential for productivity but poses a challenge: how can you ensure your data remains secure and compliant with business and regulatory requirements when it's constantly on the move?

Why Labeling and Tagging Data Matters

Data labeling and tagging provide a critical solution to this challenge. By assigning sensitivity labels to your data, you can define its importance and security level within your organization. These labels act as identifiers that abstract the content itself, enabling you to manage and track the data type without directly exposing sensitive information. With the right labeling, organizations can also control access in real time.

For example, labeling a document containing social security numbers or credit card information as Highly Confidential allows your organization to acknowledge the data's sensitivity and enforce appropriate protections, all without needing to access or expose the actual contents.

Why Sentra’s AI-Based Classification Is a Game-Changer

Sentra’s AI-based classification technology strengthens data security by applying sensitivity labels with exceptional accuracy. Using large language models (LLMs), Sentra adds context-aware capabilities to data classification, such as:

- Detecting the geographic residency of data subjects.

- Differentiating between Customer Data and Employee Data.

- Identifying and treating Synthetic or Mock Data differently from real sensitive data.

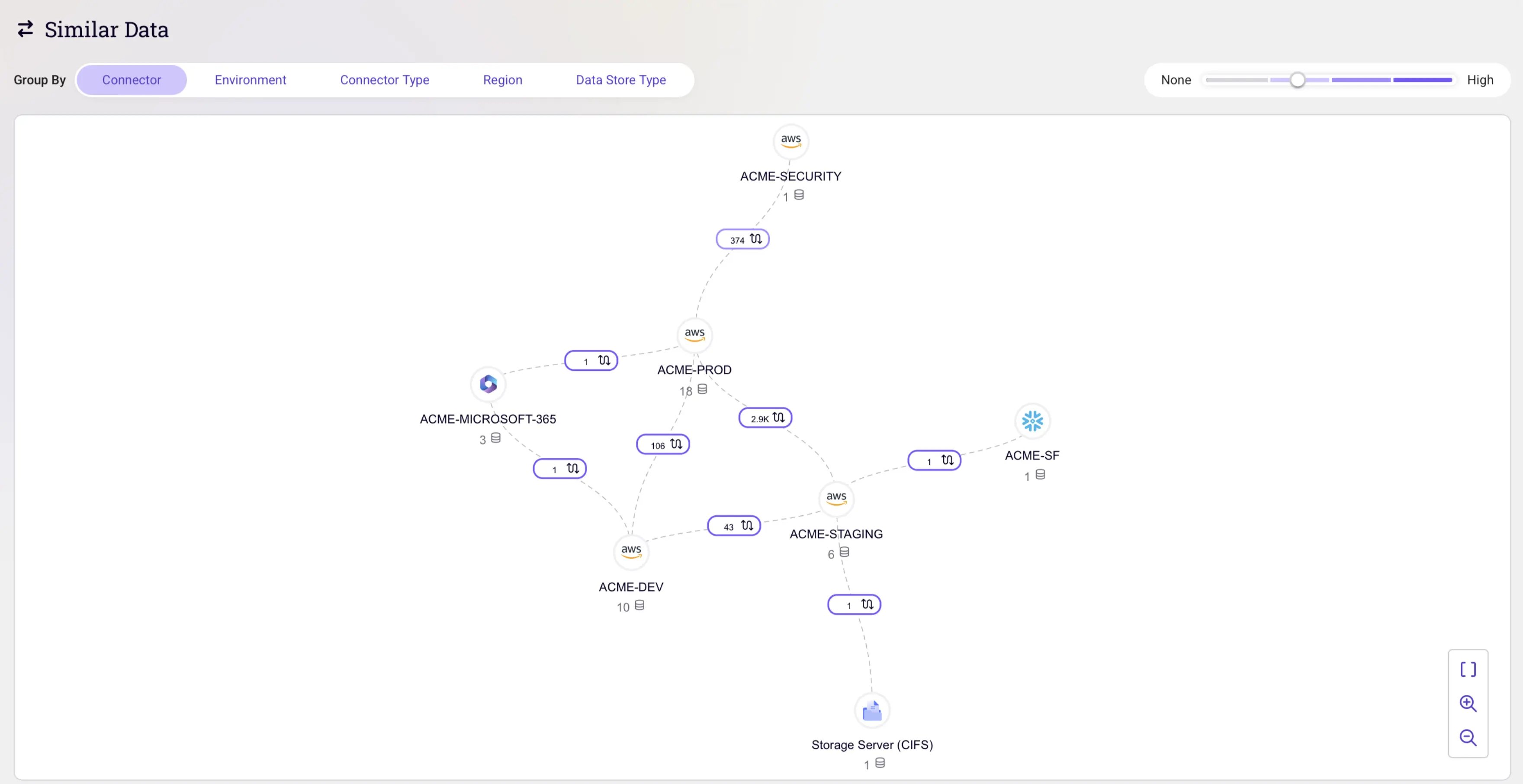

This context-based approach eliminates the inefficiencies of manual processes and seamlessly scales to meet the demands of modern, complex data environments. By integrating AI into the classification process, Sentra empowers teams to confidently and consistently protect their data—ensuring sensitive information remains secure, no matter where it resides or how it is accessed.

Benefits of Labeling and Tagging in Sentra

Sentra enhances your ability to classify and secure data by automatically applying sensitivity labels to data assets. By automating this process, Sentra removes the manual effort required from each team member—achieving accuracy that’s only possible through a deep understanding of what data is sensitive and its broader context.

Here are some key benefits of labeling and tagging in Sentra:

- Enhanced Security and Loss Prevention: Sentra’s integration with Data Loss Prevention (DLP) solutions prevents the loss of sensitive and critical data by applying the right sensitivity labels. Sentra’s granular, contextual tags provide the detail needed to automate remediation so that operations can scale.

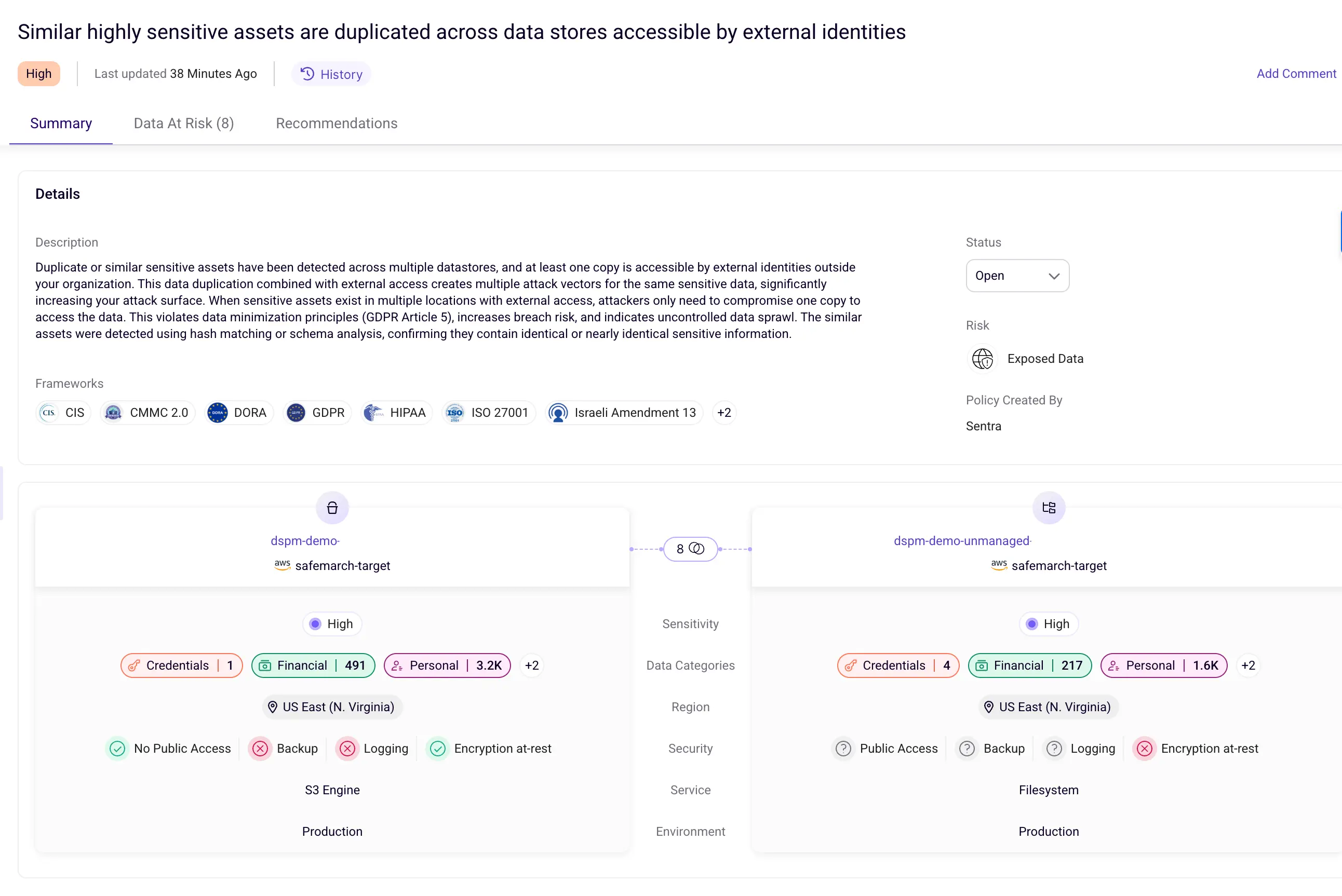

- Easily Build Your Tagging Rules: Sentra’s intuitive Rule Builder lets you automatically apply sensitivity labels to assets based on your pre-existing tagging rules, or define new rules via the builder UI (see screen below). Sentra imports discovered Microsoft Purview Information Protection (MPIP) labels to speed this process.

- Labels Move with the Data: Sensitivity labels created in Sentra can be mapped to Microsoft Purview Information Protection (MPIP) labels and applied to various applications like SharePoint, OneDrive, Teams, Amazon S3, and Azure Blob Containers. Once applied, labels are stored as metadata and travel with the file or data wherever it goes, ensuring consistent protection across platforms and services.

- Automatic Labeling: Sentra allows for the automatic application of sensitivity labels based on the data's content. Auto-tagging rules, configured for each sensitivity label, determine which label should be applied during scans for sensitive information.

- Support for Structured and Unstructured Data: Sentra enables labeling for files stored in cloud environments such as Amazon S3 or EBS volumes and for database columns in structured data environments like Amazon RDS.

By implementing these labeling practices, your organization can track, manage, and protect data with ease while maintaining compliance and safeguarding sensitive information. Whether collaborating across services or storing data in diverse cloud environments, Sentra ensures your labels and protection follow the data wherever it goes.
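To make the idea of auto-tagging rules concrete, here is a minimal Python sketch of how a rule set might choose a sensitivity label from the data classes detected during a scan. It is an illustration only, not Sentra's implementation or API: the label names, the ranking, the `TaggingRule` type, and the `pick_label` function are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical sensitivity levels, ordered from least to most sensitive.
LABEL_RANK = {
    "Public": 0,
    "Internal": 1,
    "Confidential": 2,
    "Highly Confidential": 3,
}


@dataclass(frozen=True)
class TaggingRule:
    label: str               # sensitivity label the rule applies
    data_classes: frozenset  # detected data classes that trigger the rule


# Illustrative rules only; real rules would be defined in the rule builder.
EXAMPLE_RULES = [
    TaggingRule("Highly Confidential", frozenset({"SSN", "Credit Card Number"})),
    TaggingRule("Confidential", frozenset({"Email Address", "Phone Number"})),
]


def pick_label(detected_classes, rules=EXAMPLE_RULES, default="Internal"):
    """Return the most sensitive label whose rule matches a detected class."""
    detected = set(detected_classes)
    matches = [rule.label for rule in rules if rule.data_classes & detected]
    if not matches:
        return default  # assumption: unmatched assets fall back to a default
    return max(matches, key=LABEL_RANK.__getitem__)


print(pick_label({"Email Address", "SSN"}))
print(pick_label({"Email Address"}))
```

When several rules match, this sketch picks the most sensitive label, so a file containing both email addresses and Social Security numbers is treated as Highly Confidential. That tie-breaking policy is an assumption made for the example.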

Applying Sensitivity Labels to Data Assets in Sentra


In today’s rapidly evolving data security landscape, ensuring that your data is properly classified and protected is crucial. One effective way to achieve this is by applying sensitivity labels to your data assets. Sensitivity labels help ensure that data is handled according to its level of sensitivity, reducing the risk of accidental exposure and enabling compliance with data protection regulations.

Below, we’ll walk you through the necessary steps to automatically apply sensitivity labels to your data assets in Sentra. By following these steps, you can enhance your data governance, improve data security, and maintain clear visibility over your organization's sensitive information.

The process involves three key actions:

- Create Sensitivity Labels: The first step in applying sensitivity labels is creating them within Sentra. These labels allow you to categorize data assets according to various rules and classifications. Once set up, these labels will automatically apply to data assets based on predefined criteria, such as the types of classifications detected within the data. Sensitivity labels help ensure that sensitive information is properly identified and protected.

- Connect Accounts with Data Assets: The next step is to connect your accounts with the relevant data assets. This integration allows Sentra to automatically discover and continuously scan all your data assets, ensuring that no data goes unnoticed. As new data is created or modified, Sentra will promptly detect and categorize it, keeping your data classification up to date and reducing manual efforts.

- Apply Classification Tags: Whenever a data asset is scanned, Sentra will automatically apply classification tags to it, such as data classes, data contexts, and sensitivity labels. These tags are visible in Sentra’s data catalog, giving you a comprehensive overview of your data’s classification status. By applying these tags consistently across all your data assets, you’ll have a clear, automated way to manage sensitive data, ensuring compliance and security.

By following these steps, you can streamline your data classification process, making it easier to protect your sensitive information, improve your data governance practices, and reduce the risk of data breaches.
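As a rough picture of what the resulting catalog view offers, the Python sketch below models a scanned asset with the three kinds of classification tags mentioned above (data classes, data contexts, and a sensitivity label) and summarizes a small catalog. The `CatalogEntry` type, its field names, and the sample assets are hypothetical and do not reflect Sentra's actual data model.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Hypothetical record for one scanned data asset."""
    asset: str
    data_classes: set = field(default_factory=set)
    data_contexts: set = field(default_factory=set)
    sensitivity_label: str = "Unlabeled"


def assets_by_label(entries):
    """Count how many assets carry each sensitivity label."""
    return Counter(entry.sensitivity_label for entry in entries)


def find_assets(entries, label, context=None):
    """List assets with a given label, optionally narrowed by data context."""
    return [
        entry.asset
        for entry in entries
        if entry.sensitivity_label == label
        and (context is None or context in entry.data_contexts)
    ]


# Made-up sample data for illustration.
catalog = [
    CatalogEntry("s3://hr-bucket/payroll.csv", {"SSN"}, {"Employee Data"},
                 "Highly Confidential"),
    CatalogEntry("s3://crm-bucket/leads.csv", {"Email Address"},
                 {"Customer Data"}, "Confidential"),
    CatalogEntry("s3://qa-bucket/fixtures.csv", {"Email Address"},
                 {"Synthetic Data"}),
]

print(assets_by_label(catalog))
print(find_assets(catalog, "Highly Confidential", context="Employee Data"))
```

Keeping the label alongside the data classes and contexts means a query such as "all Highly Confidential assets holding employee data" needs only the tags, not the underlying file contents.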

Applying MPIP Labels

To apply Microsoft Purview Information Protection (MPIP) labels based on Sentra sensitivity labels, follow a few additional steps:

- Set up the Microsoft Purview integration, which allows Sentra to import and sync MPIP sensitivity labels.

- Create tagging rules, which map Sentra sensitivity labels to MPIP sensitivity labels (for example, “Very Confidential” in Sentra would be mapped to “ACME - Highly Confidential” in MPIP) and specify the services to which each rule applies (for example, Microsoft 365 and Amazon S3).
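The mapping described in these steps can be pictured as a small lookup keyed by Sentra label and scoped to a set of services. The Python sketch below reuses the label and service names from the example above; the `MappingRule` type and `resolve_mpip_label` function are hypothetical and are not part of any Sentra or Microsoft API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MappingRule:
    """Hypothetical tagging rule: Sentra label -> MPIP label, per service."""
    sentra_label: str
    mpip_label: str
    services: frozenset


MAPPING_RULES = [
    MappingRule(
        sentra_label="Very Confidential",
        mpip_label="ACME - Highly Confidential",
        services=frozenset({"Microsoft 365", "Amazon S3"}),
    ),
]


def resolve_mpip_label(sentra_label: str, service: str,
                       rules=MAPPING_RULES) -> Optional[str]:
    """Return the MPIP label to apply, or None if no rule covers the service."""
    for rule in rules:
        if rule.sentra_label == sentra_label and service in rule.services:
            return rule.mpip_label
    return None


print(resolve_mpip_label("Very Confidential", "Amazon S3"))
print(resolve_mpip_label("Very Confidential", "Azure Blob Storage"))
```

Returning `None` for an uncovered service makes the scoping explicit: in this sketch a rule only takes effect on the services it lists.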

Using Sensitivity Labels in Microsoft DLP

Microsoft Purview DLP (as well as other industry-leading DLP solutions) supports MPIP labels in its policies, so admins can control and prevent the loss of sensitive data across multiple services and applications. For instance, an MPIP ‘highly confidential’ label may instruct Microsoft Purview DLP to restrict the transfer of sensitive data outside a certain geography. Likewise, a similar label could specify that confidential intellectual property (IP) must not be shared within Teams collaborative workspaces.

Labels can also help control access to sensitive data. Organizations can set a rule that grants read permission only for specific tags, for example so that only production IAM roles can access production files. Further, for use cases where data is stored in a single store, organizations can estimate the storage cost for each specific tag.
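The last two uses, tag-based read access and per-tag cost estimation, can be sketched in a few lines of Python. Everything here is hypothetical: the role names, the policy shape, and the flat price per GB-month are assumptions for illustration, and a real deployment would express these rules in its IAM and billing tooling rather than in application code.

```python
from collections import defaultdict

# Hypothetical policy: tag -> roles allowed to read assets carrying that tag.
READ_POLICY = {
    "production": {"prod-app-role", "prod-batch-role"},
}


def can_read(role, asset_tags, policy=READ_POLICY):
    """Allow a read only if the role is permitted for every restricted tag."""
    return all(role in policy[tag] for tag in asset_tags if tag in policy)


def storage_cost_by_tag(objects, price_per_gb_month):
    """Estimate monthly storage cost per tag for objects in a single store."""
    totals = defaultdict(float)
    for obj in objects:
        for tag in obj["tags"]:
            totals[tag] += obj["size_gb"] * price_per_gb_month
    return dict(totals)


# Made-up objects and an assumed flat price, for illustration only.
objects = [
    {"key": "orders.parquet", "size_gb": 100, "tags": ["production"]},
    {"key": "customers.parquet", "size_gb": 50, "tags": ["production", "pii"]},
]

print(can_read("prod-app-role", ["production"]))
print(can_read("dev-role", ["production"]))
print(storage_cost_by_tag(objects, price_per_gb_month=0.02))
```

Note that an object carrying two tags contributes to both totals, so the per-tag figures in this sketch answer "what does data with this tag cost?" and are not meant to sum to the overall bill.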

Build a Stronger Foundation with Accurate Data Classification

Effectively tagging sensitive data unlocks significant benefits for organizations, driving improvements across accuracy, efficiency, scalability, and risk management. With precise classification exceeding 95% accuracy and minimal false positives, organizations can confidently label both structured and unstructured data. Automated tagging rules reduce the reliance on manual effort, saving valuable time and resources. Granular, contextual tags enable confident and automated remediation, ensuring operations can scale seamlessly. Additionally, robust data tagging strengthens DLP and compliance strategies by fully leveraging Microsoft Purview’s capabilities. By streamlining these processes, organizations can consistently label and secure data across their entire estate, freeing resources to focus on strategic priorities and innovation.
