Cloud environments evolve continuously: new workloads, APIs, identities, and services are deployed every day. This constant change introduces security gaps that attackers can exploit if left unmanaged.

Cloud vulnerability management helps organizations identify, prioritize, and remediate security weaknesses across cloud infrastructure, workloads, and services to reduce breach risk, protect sensitive data, and maintain compliance.

This guide explains what cloud vulnerability management is, why it matters in 2026, common cloud vulnerabilities, best practices, tools, and more.

What is Cloud Vulnerability Management?

Cloud vulnerability management is a proactive approach to identifying and mitigating security vulnerabilities within your cloud infrastructure, which in turn strengthens cloud data security. It involves the systematic assessment of cloud resources and applications to pinpoint weaknesses that cybercriminals might exploit. By addressing these vulnerabilities, you reduce the risk of data breaches, service interruptions, and other security incidents that could have a significant impact on your organization.

Why Cloud Vulnerability Management Matters in 2026

Cloud vulnerability management matters in 2026 because cloud environments are more dynamic, interconnected, and data-driven than ever before, making traditional, periodic security assessments insufficient. Modern cloud infrastructure changes continuously as teams deploy new workloads, APIs, and services across multi-cloud and hybrid environments. Each change can introduce new security vulnerabilities, misconfigurations, or exposed attack paths that attackers can exploit within minutes.

Several trends are driving the increased importance of cloud vulnerability management in 2026:

- Accelerated cloud adoption: Organizations continue to move critical workloads and sensitive data into IaaS, PaaS, and SaaS environments, significantly expanding the attack surface.

- Misconfigurations remain the leading risk: Over-permissive access policies, exposed storage services, and insecure APIs are still the most common causes of cloud breaches.

- Shorter time to exploit: Threat actors now exploit newly exposed vulnerabilities within hours, not weeks, making continuous vulnerability scanning essential.

- Increased regulatory pressure: Compliance frameworks such as GDPR, HIPAA, SOC 2, and emerging AI and data regulations require continuous risk assessment and documentation.

- Data-centric breach impact: Cloud breaches increasingly focus on accessing sensitive data rather than infrastructure alone, raising the stakes of unresolved vulnerabilities.

In this environment, cloud vulnerability management best practices such as continuous scanning, risk-based prioritization, and automated remediation are no longer optional. They are a foundational requirement for maintaining cloud security, protecting sensitive data, and meeting compliance obligations in 2026.

Common Vulnerabilities in Cloud Security

Before diving into the details of cloud vulnerability management, it's essential to understand the types of vulnerabilities that can affect your cloud environment. Here are some common vulnerabilities that cloud security teams encounter:

Vulnerable APIs

Application Programming Interfaces (APIs) are the backbone of many cloud services. They allow applications to communicate and interact with the cloud infrastructure. However, if not adequately secured, APIs can be an entry point for cyberattacks. Insecure API endpoints, insufficient authentication, and improper data handling can all lead to vulnerabilities.

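```python
# Insecure API endpoint example: the request carries no credentials,
# so a 200 response means the endpoint is reachable without authentication.
import requests

response = requests.get('https://example.com/api/v1/insecure-endpoint', timeout=10)

if response.status_code == 200:
    # Handle the response
    print(response.text)
else:
    # Report an error
    print(f"Request failed with status {response.status_code}")
```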
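
By contrast, a secured endpoint rejects any request that lacks a valid credential. The following is a minimal sketch of such a server-side check, assuming a hypothetical shared bearer token (`EXPECTED_TOKEN`) and a hypothetical helper named `is_authorized`; production services would more likely delegate this to an identity provider or API gateway.

```python
import hmac

# Hypothetical secret for illustration only; load real secrets from a secrets manager.
EXPECTED_TOKEN = "example-token-123"


def is_authorized(headers: dict) -> bool:
    """Return True only if the request carries the expected bearer token."""
    auth_header = headers.get("Authorization", "")
    scheme, _, token = auth_header.partition(" ")
    if scheme != "Bearer" or not token:
        return False
    # compare_digest avoids leaking information through comparison timing
    return hmac.compare_digest(token.encode(), EXPECTED_TOKEN.encode())
```

A request handler would call `is_authorized(request_headers)` first and return an HTTP 401 response whenever it yields `False`.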

Misconfigurations

Misconfigurations are one of the leading causes of security breaches in the cloud. These can range from overly permissive access control policies to improperly configured firewall rules. Misconfigurations may leave your data exposed or allow unauthorized access to resources.

```yaml
# Misconfigured firewall rule
- name: allow-http
  sourceRanges:
    - 0.0.0.0/0  # Open to the world
  allowed:
    - IPProtocol: TCP
      ports:
        - '80'
```
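
Rules like the one above are simple to detect automatically. As a rough sketch, assuming firewall rules have already been exported into Python dictionaries with the same `name` and `sourceRanges` fields (the function name and sample data are illustrative, not part of any vendor API):

```python
def find_open_firewall_rules(rules):
    """Return the names of rules that allow traffic from any IPv4 or IPv6 address."""
    open_ranges = {"0.0.0.0/0", "::/0"}
    return [
        rule["name"]
        for rule in rules
        if open_ranges & set(rule.get("sourceRanges", []))
    ]


# Illustrative sample data
rules = [
    {"name": "allow-http", "sourceRanges": ["0.0.0.0/0"]},
    {"name": "allow-internal", "sourceRanges": ["10.0.0.0/8"]},
]
print(find_open_firewall_rules(rules))  # ['allow-http']
```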

Data Theft or Loss

Data breaches can result from poor data handling practices, encryption failures, or a lack of proper data access controls. Stolen or compromised data can lead to severe consequences, including financial losses and damage to an organization's reputation.

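```java
// Insecure data handling example: sensitive data is stored and read as
// plaintext, with no encryption or access check, and errors are swallowed.
import java.io.BufferedReader;
import java.io.FileReader;

public class InsecureDataHandler {
    public String readSensitiveData() {
        try (BufferedReader reader = new BufferedReader(new FileReader("sensitive-data.txt"))) {
            // Read the sensitive data (first line only, for brevity)
            return reader.readLine();
        } catch (Exception e) {
            // Handle errors (here they are silently ignored, which hides failures)
            return null;
        }
    }
}
```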

Poor Access Management

Inadequate access controls can lead to unauthorized users gaining access to your cloud resources. This vulnerability can result from over-privileged user accounts, ineffective role-based access control (RBAC), or lack of multi-factor authentication (MFA).

```yaml
# Overprivileged user account
- members:
    - user:johndoe@example.com
  role: roles/editor
```
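
A binding like this can be flagged during a routine access review. The sketch below assumes IAM bindings have been loaded into dictionaries shaped like the example above; the set of roles treated as too broad (`BROAD_ROLES`) and the function name are assumptions to adapt to your own policy.

```python
# Roles treated as overly broad in this sketch; adjust to your own policy.
BROAD_ROLES = {"roles/owner", "roles/editor"}


def find_broad_bindings(bindings):
    """Return (member, role) pairs where a member holds a broad role."""
    findings = []
    for binding in bindings:
        if binding["role"] in BROAD_ROLES:
            for member in binding.get("members", []):
                findings.append((member, binding["role"]))
    return findings


# Illustrative sample data
bindings = [
    {"members": ["user:johndoe@example.com"], "role": "roles/editor"},
    {"members": ["user:janedoe@example.com"], "role": "roles/viewer"},
]
print(find_broad_bindings(bindings))
```

Each flagged pair is a candidate for replacement with a narrower role, in line with the principle of least privilege.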

Non-Compliance

Non-compliance with regulatory standards and industry best practices can lead to vulnerabilities. Failing to meet specific security requirements can result in fines, legal actions, and a damaged reputation. For example, non-compliance with GDPR can lead to severe financial penalties and legal consequences.

Understanding these vulnerabilities is crucial for effective cloud vulnerability management. Once you can recognize these weaknesses, you can take steps to mitigate them.

Cloud Vulnerability Assessment and Mitigation

Now that you're familiar with common cloud vulnerabilities, it's essential to know how to mitigate them effectively. Mitigation involves a combination of proactive measures to reduce the risk and the potential impact of security issues.

Here are some steps to consider:

- Regular Cloud Vulnerability Scanning: Implement a robust vulnerability scanning process that identifies and assesses vulnerabilities within your cloud environment. Use automated tools that can detect misconfigurations, outdated software, and other potential weaknesses.

- Access Control: Implement strong access controls to ensure that only authorized users have access to your cloud resources. Enforce the principle of least privilege, providing users with the minimum level of access necessary to perform their tasks.

- Configuration Management: Regularly review and update your cloud configurations to ensure they align with security best practices. Practices and tools such as Infrastructure as Code (IaC) and Configuration Management Databases (CMDBs) can help maintain consistency and security.

- Patch Management: Keep your cloud infrastructure up to date by applying patches and updates promptly. Vulnerabilities in the underlying infrastructure can be exploited by attackers, so staying current is crucial.

- Encryption: Use encryption to protect data both at rest and in transit. Ensure that sensitive information is adequately encrypted, and use strong encryption protocols and algorithms.

- Monitoring and Incident Response: Implement comprehensive monitoring and incident response capabilities to detect and respond to security incidents in real time. Early detection can minimize the impact of a breach.

- Security Awareness Training: Train your team on security best practices and educate them about potential risks and how to identify and report security incidents.
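
To make the patch management step more concrete, here is a minimal sketch that compares installed versions against the minimum patched versions. It assumes simple dotted numeric version strings, and the package names and version numbers are invented for illustration.

```python
def parse_version(version: str) -> tuple:
    """Turn a dotted numeric version such as '1.2.10' into (1, 2, 10)."""
    return tuple(int(part) for part in version.split("."))


def find_outdated(installed: dict, minimum_patched: dict) -> list:
    """Return packages whose installed version is below the patched version."""
    outdated = []
    for package, min_version in minimum_patched.items():
        current = installed.get(package)
        if current is not None and parse_version(current) < parse_version(min_version):
            outdated.append(package)
    return sorted(outdated)


# Hypothetical inventory and advisory data
installed = {"libexample": "1.2.3", "othertool": "2.0.0"}
minimum_patched = {"libexample": "1.2.10", "othertool": "1.9.0"}
print(find_outdated(installed, minimum_patched))  # ['libexample']
```

Comparing parsed tuples rather than raw strings matters here: as plain text, "1.2.3" sorts after "1.2.10", which would hide the outdated package.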

Key Features of Cloud Vulnerability Management

Effective cloud vulnerability management provides several key benefits that are essential for securing your cloud environment. Let's explore these benefits in more detail:

Better Security

Cloud vulnerability management ensures that your cloud environment is continuously monitored for vulnerabilities. By identifying and addressing these weaknesses, you reduce the attack surface and lower the risk of data breaches or other security incidents. This proactive approach to security is essential in an ever-evolving threat landscape.

```python
# Code snippet for vulnerability scanning
# Note: security_scanner is a placeholder module name used for illustration.
import security_scanner

# Initialize the scanner
scanner = security_scanner.Scanner()

# Run a vulnerability scan
scan_results = scanner.scan_cloud_resources()
```

Cost-Effective

By preventing security incidents and data breaches, cloud vulnerability management helps you avoid potentially significant financial losses and reputational damage. The cost of implementing a vulnerability management system is often far less than the potential costs associated with a security breach.

```python
# Code snippet for cost analysis
# Note: both figures are placeholders, not real estimates.
def calculate_potential_cost_of_breach():
    # Estimate the cost of a data breach (placeholder value)
    potential_cost = 1_000_000
    return potential_cost

cost_of_vulnerability_management = 100_000  # placeholder program cost
potential_cost = calculate_potential_cost_of_breach()

if potential_cost > cost_of_vulnerability_management:
    print("Investing in vulnerability management is cost-effective.")
else:
    print("The program costs more than the estimated breach; revisit its scope.")
```

Highly Preventative

Vulnerability management is a proactive and preventive security measure. By addressing vulnerabilities before they can be exploited, you reduce the likelihood of a security incident occurring. This preventative approach is far more effective than reactive measures.

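```python
# Code snippet for proactive security
# Note: preventive_security_module is a placeholder module name used for illustration.
import preventive_security_module

# Enable proactive security measures
preventive_security_module.enable_proactive_measures()
```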

Time-Saving

Cloud vulnerability management automates many aspects of the security process. This automation reduces the time required for routine security tasks, such as vulnerability scanning and reporting. As a result, your security team can focus on more strategic and complex security challenges.

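```python
# Code snippet for automated vulnerability scanning
# Note: automated_vulnerability_scanner is a placeholder module name used for illustration.
import automated_vulnerability_scanner

# Configure automated scanning schedule
automated_vulnerability_scanner.schedule_daily_scan()
```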

Steps in Implementing Cloud Vulnerability Management

Implementing cloud vulnerability management is a systematic process that involves several key steps. Let's break down these steps for a better understanding:

Identification of Issues

The first step in implementing cloud vulnerability management is identifying potential vulnerabilities within your cloud environment. This involves conducting regular vulnerability scans to discover security weaknesses.

```python
# Code snippet for identifying vulnerabilities
# Note: vulnerability_identifier is a placeholder module name used for illustration.
import vulnerability_identifier

# Run a vulnerability scan to identify issues
vulnerabilities = vulnerability_identifier.scan_cloud_resources()
```

Risk Assessment

After identifying vulnerabilities, you need to assess their risk. Not all vulnerabilities are equally critical. Risk assessment helps prioritize which vulnerabilities to address first based on their potential impact and likelihood of exploitation.

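```python
# Code snippet for risk assessment
# Note: risk_assessment is a placeholder module name used for illustration;
# vulnerabilities is the result of the identification step.
import risk_assessment

# Assess the risk of identified vulnerabilities
priority_vulnerabilities = risk_assessment.assess_risk(vulnerabilities)
```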
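
To show what prioritizing by potential impact and likelihood of exploitation can look like in practice, here is a minimal self-contained sketch. It assumes a simple scoring model (impact multiplied by likelihood, each on an assumed 1 to 5 scale); the `Finding` class and the sample findings are illustrative, and real programs often use CVSS or a vendor-specific score instead.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    impact: int      # assumed scale: 1 (low) to 5 (high)
    likelihood: int  # assumed scale: 1 (unlikely) to 5 (likely)

    @property
    def risk_score(self) -> int:
        return self.impact * self.likelihood


def prioritize(findings):
    """Return findings ordered from highest to lowest risk score."""
    return sorted(findings, key=lambda f: f.risk_score, reverse=True)


# Illustrative sample data
findings = [
    Finding("Outdated library on internal host", impact=2, likelihood=2),
    Finding("Public storage bucket with customer data", impact=5, likelihood=4),
    Finding("Open HTTP port on test server", impact=2, likelihood=4),
]
for finding in prioritize(findings):
    print(finding.risk_score, finding.name)
```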

Vulnerability Remediation

Remediation involves taking action to fix or mitigate the identified vulnerabilities. This step may include applying patches, reconfiguring cloud resources, or implementing access controls to reduce the attack surface.

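```python
# Code snippet for vulnerability remediation
# Note: remediation_tool is a placeholder module name used for illustration;
# priority_vulnerabilities is the result of the risk assessment step.
import remediation_tool

# Remediate identified vulnerabilities
remediation_tool.remediate_vulnerabilities(priority_vulnerabilities)
```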

Vulnerability Assessment Report

Documenting the entire vulnerability management process is crucial for compliance and transparency. Create a vulnerability assessment report that details the findings, risk assessments, and remediation efforts.

```python
# Code snippet for generating a vulnerability assessment report
# Note: report_generator is a placeholder module name used for illustration.
import report_generator

# Generate a vulnerability assessment report
report_generator.generate_report(priority_vulnerabilities)
```
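
As a self-contained illustration of what such a report might contain, the sketch below summarizes findings by severity and lists the ones still open. The field names (`id`, `severity`, `status`) and the JSON layout are assumptions, not a standard report format.

```python
import json
from collections import Counter


def build_report(findings):
    """Summarize findings by severity and list the ones not yet remediated."""
    summary = {
        "total": len(findings),
        "by_severity": dict(Counter(f["severity"] for f in findings)),
        "open": [f["id"] for f in findings if f["status"] != "remediated"],
    }
    return json.dumps(summary, indent=2, sort_keys=True)


# Illustrative sample data
sample_findings = [
    {"id": "F-1", "severity": "high", "status": "open"},
    {"id": "F-2", "severity": "low", "status": "remediated"},
    {"id": "F-3", "severity": "high", "status": "open"},
]
print(build_report(sample_findings))
```

Emitting JSON keeps the report machine-readable, so it can be archived as compliance evidence or fed into a dashboard.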

Re-Scanning

The final step is to re-scan your cloud environment periodically. New vulnerabilities may emerge, and existing vulnerabilities may reappear. Regular re-scanning ensures that your cloud environment remains secure over time.

```python
# Code snippet for periodic re-scanning
# Note: re_scanner is a placeholder module name used for illustration.
import re_scanner

# Schedule regular re-scans of your cloud resources
re_scanner.schedule_periodic_rescans()
```
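
Re-scanning is most useful when each scan is compared with the previous one. The following sketch assumes every finding has a stable identifier and that you keep a set of identifiers previously marked as resolved; the function and parameter names are illustrative.

```python
def compare_scans(previous: set, current: set, resolved_history: set) -> dict:
    """Classify findings as new, reappeared, or resolved since the last scan."""
    appeared = current - previous
    return {
        "new": sorted(appeared - resolved_history),
        "reappeared": sorted(appeared & resolved_history),
        "resolved": sorted(previous - current),
    }


# Illustrative finding identifiers
result = compare_scans(
    previous={"A", "B"},
    current={"B", "C", "D"},
    resolved_history={"D"},
)
print(result)  # {'new': ['C'], 'reappeared': ['D'], 'resolved': ['A']}
```

Findings in the `reappeared` bucket deserve particular attention, since they suggest a fix was reverted or a misconfigured template was redeployed.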

By following these steps, you establish a robust cloud vulnerability management program that helps secure your cloud environment effectively.

Challenges with Cloud Vulnerability Management

While cloud vulnerability management offers many advantages, it also comes with its own set of challenges. Some of the common challenges include:

| Challenge | Description |
| --- | --- |
| Scalability | As your cloud environment grows, managing and monitoring vulnerabilities across all resources can become challenging. |
| Complexity | Cloud environments can be complex, with numerous interconnected services and resources. Understanding the intricacies of these environments is essential for effective vulnerability management. |
| Patch Management | Keeping cloud resources up to date with the latest security patches can be a time-consuming task, especially in a dynamic cloud environment. |
| Compliance | Ensuring compliance with industry standards and regulations can be challenging, as cloud environments often require tailored configurations to meet specific compliance requirements. |
| Alert Fatigue | With a constant stream of alerts and notifications from vulnerability scanning tools, security teams can experience alert fatigue, potentially missing critical security issues. |
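
Alert fatigue in particular can be reduced with basic triage before alerts reach an analyst. The sketch below drops duplicate alerts and anything under a chosen severity; the severity scale, field names, and default threshold are assumptions to tune for your environment.

```python
SEVERITY_ORDER = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def triage_alerts(alerts, min_severity="high"):
    """Drop duplicates and alerts below min_severity, keeping first occurrences."""
    threshold = SEVERITY_ORDER[min_severity]
    seen = set()
    kept = []
    for alert in alerts:
        key = (alert["resource"], alert["issue"])
        if key in seen or SEVERITY_ORDER[alert["severity"]] < threshold:
            continue
        seen.add(key)
        kept.append(alert)
    return kept


# Illustrative sample data
alerts = [
    {"resource": "bucket-1", "issue": "public-read", "severity": "critical"},
    {"resource": "bucket-1", "issue": "public-read", "severity": "critical"},
    {"resource": "vm-7", "issue": "missing-tag", "severity": "low"},
    {"resource": "vm-7", "issue": "open-ssh", "severity": "high"},
]
print(len(triage_alerts(alerts)))  # 2
```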

Cloud Vulnerability Management Best Practices

To overcome the challenges and maximize the benefits of cloud vulnerability management, consider these best practices:

- Automation: Implement automated vulnerability scanning and remediation processes to save time and reduce the risk of human error.

- Regular Training: Keep your security team well-trained and updated on the latest cloud security best practices.

- Scalability: Choose a vulnerability management solution that can scale with your cloud environment.

- Prioritization: Use risk assessments to prioritize the remediation of vulnerabilities effectively.

- Documentation: Maintain thorough records of your vulnerability management efforts, including assessment reports and remediation actions.

- Collaboration: Foster collaboration between your security team and cloud administrators to ensure effective vulnerability management.

- Compliance Check: Regularly verify your cloud environment's compliance with relevant standards and regulations.

Tools to Help Manage Cloud Vulnerabilities

To assist you in your cloud vulnerability management efforts, there are several tools available. These tools offer features for vulnerability scanning, risk assessment, and remediation.

Here are some popular options:

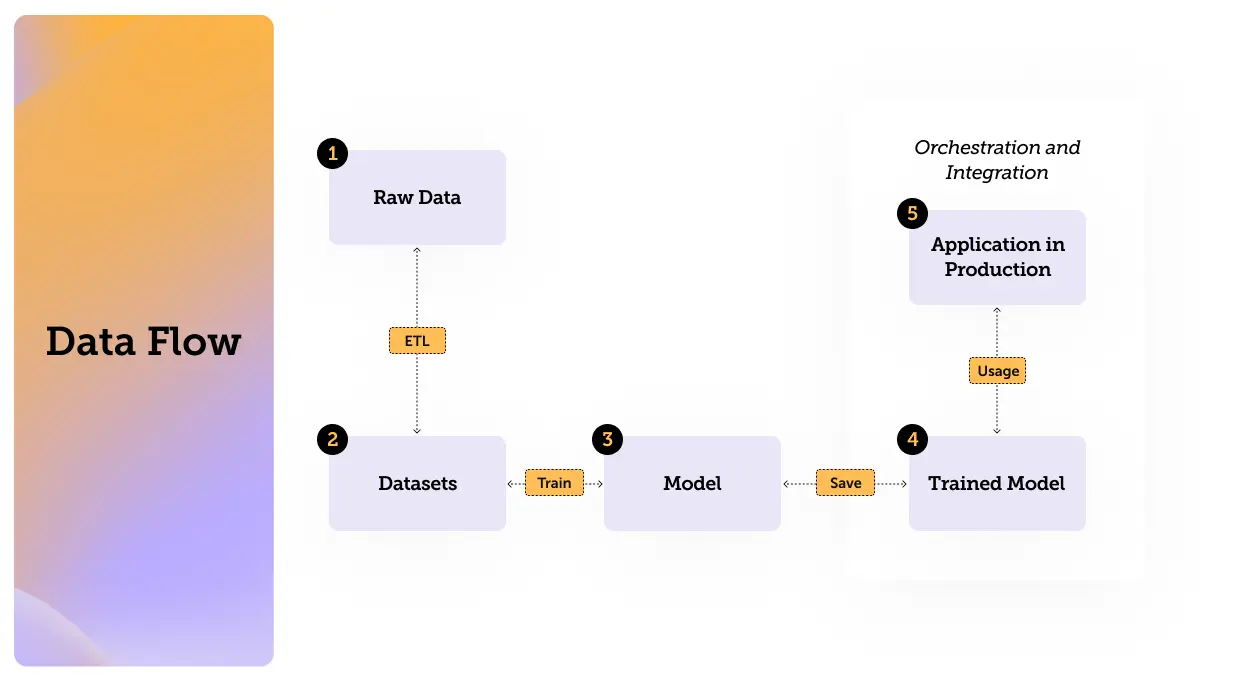

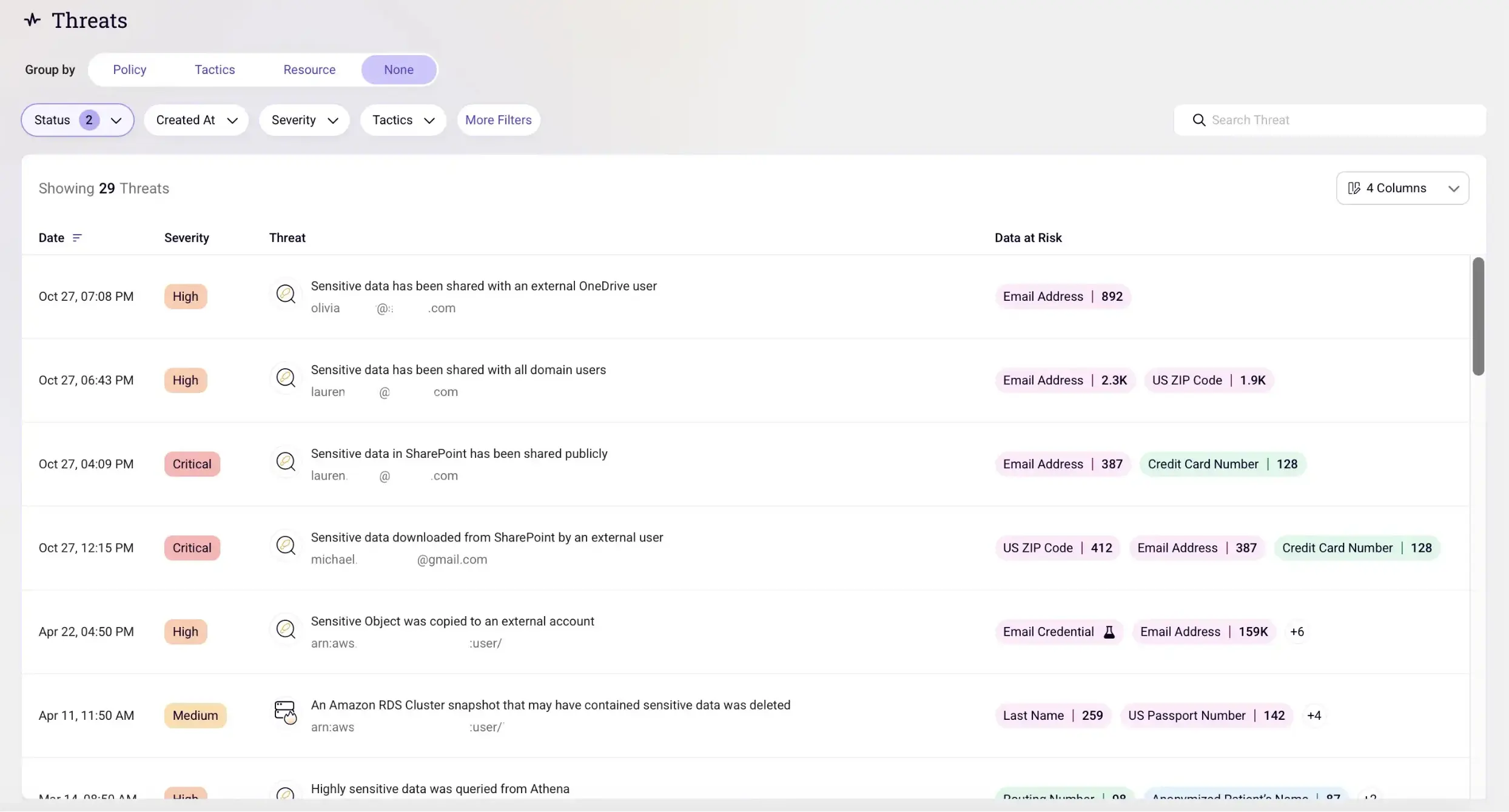

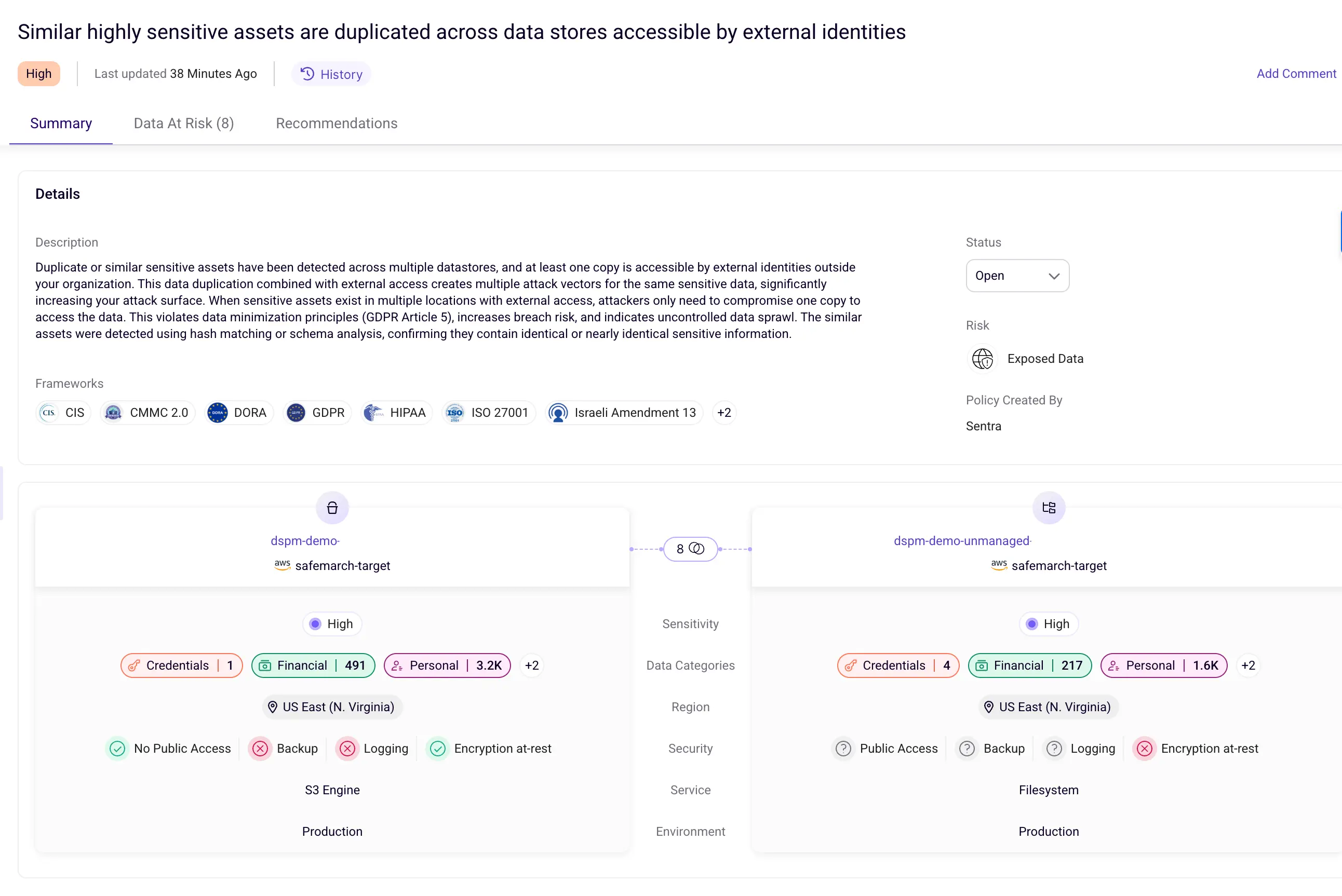

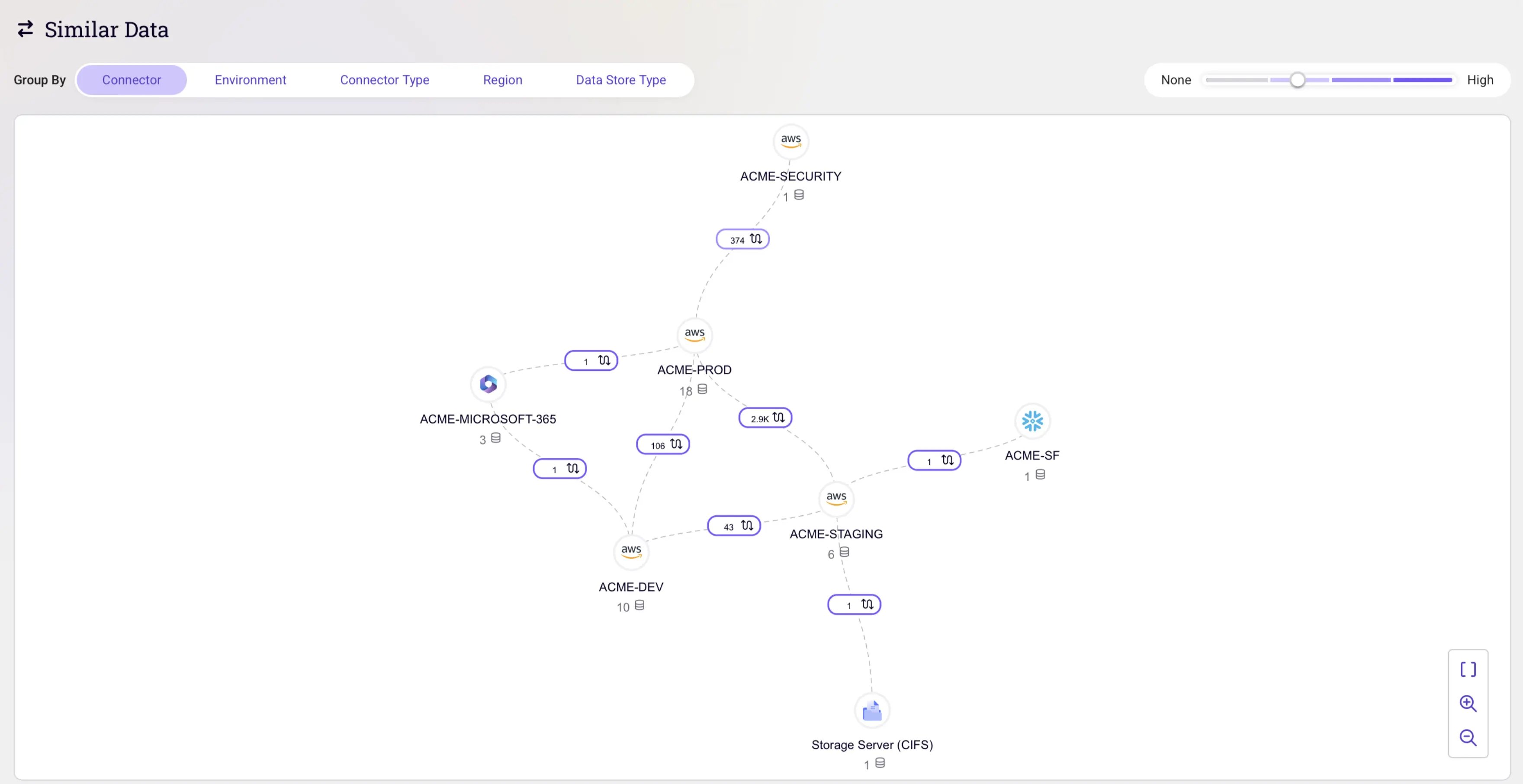

1. Sentra: Sentra is a cloud-based data security platform that provides visibility, assessment, and remediation for data security. It can be used to discover and classify sensitive data, analyze data security controls, and automate alerts in cloud data stores, IaaS, PaaS, and production environments.

2. Tenable Nessus: A widely used vulnerability scanner that provides comprehensive vulnerability assessment and prioritization.

3. Qualys Vulnerability Management: Offers vulnerability scanning, risk assessment, and compliance management for cloud environments.

4. AWS Config: Amazon Web Services (AWS) provides AWS Config, as well as other AWS cloud security tools, to help you assess, audit, and evaluate the configurations of your AWS resources.

5. Microsoft Defender for Cloud (formerly Azure Security Center): Microsoft Azure's native service for continuous monitoring, threat detection, and vulnerability assessment.

6. Web Security Scanner (formerly Google Cloud Security Scanner): A tool specifically designed for Google Cloud that scans your web applications for vulnerabilities.

7. OpenVAS: An open-source vulnerability scanner that can be used to assess the security of your cloud infrastructure.

Choosing the right tool depends on your specific cloud environment, needs, and budget. Be sure to evaluate the features and capabilities of each tool to find the one that best fits your requirements.

Conclusion

In an era of increasing cyber threats and data breaches, cloud vulnerability management is a vital practice to secure your cloud environment. By understanding common cloud vulnerabilities, implementing effective mitigation strategies, and following best practices, you can significantly reduce the risk of security incidents. Embracing automation and utilizing the right tools can streamline the vulnerability management process, making it a manageable and cost-effective endeavor.

Remember that security is an ongoing effort, and regular vulnerability scanning, risk assessment, and remediation are crucial for maintaining the integrity and safety of your cloud infrastructure. With a robust cloud vulnerability management program in place, you can confidently leverage the benefits of the cloud while keeping your data and assets secure.

See how Sentra identifies cloud vulnerabilities that put sensitive data at risk.
