Cloud Data Breaches: Cloud vs On Premise Security

“The cloud is more secure than on prem.” This has been taken for granted for years, and it is one of the many reasons companies are adopting a ‘cloud-first mentality’. But when it comes to data breaches, this isn’t always the case.

That’s why you still can’t find a good answer to the question “Is the cloud more secure than on-premise?”

Like everything else in security, the answer is always ‘it depends’. While having certain security aspects managed by the cloud provider is nice, it’s hardly comprehensive. The cloud presents its own set of data security concerns that need to be addressed.

In this post, we’ll be looking at data breaches in the cloud vs on premises. What are the unique data security risks associated with each, and can we definitively say one is better at mitigating the risks of data breaches?

On Premises Data Security

An on-premise architecture is the traditional way organizations manage their networks and data. The company’s servers, hardware, software, and network are all managed directly by the IT department, which assumes full control over uptime, security, and data. While more labor intensive than cloud infrastructure, an on-premise architecture has the advantage of a perimeter to defend. Unlike in the cloud, IT and security teams also know exactly where all of their data is, and where it’s supposed to be. Even if data is duplicated without authorization, it’s duplicated on the on-prem server, with existing perimeter protections in place. The advantage of this setup can’t be overstated. IT has decades of experience managing on-premise servers, and there are hundreds of tested products on the market that do an excellent job of securing an on-prem perimeter.

Despite these advantages, around half of data breaches still originate in on-premise architectures rather than the cloud. Several factors contribute to this. Most importantly, cloud providers like Amazon Web Services, Azure, and GCP are responsible for some aspects of security. Additionally, while securing a perimeter might be more straightforward than the defense-in-depth approach required for the cloud, on-premise vulnerabilities are also easier for attackers to find and exploit: they can search public exploit databases and then look for organizations that haven’t patched the relevant vulnerability.

Data Security in the Cloud

Infrastructure as a Service (IaaS) cloud computing runs on a ‘shared responsibility model’. The cloud provider is responsible for the hardware, so it provides the physical security, but protecting the software, applications, and data is still the enterprise’s responsibility. And while some data leaks are the result of poor physical security, many of the major leaks today are the result of misconfigurations and vulnerabilities, not someone physically accessing a hard drive.

So when people claim the cloud is better for data security than on premises, what exactly do they mean?

Essentially, they’re saying that data in the cloud is more secure when the cloud is correctly set up. And no, this is not as obvious as it sounds. Because the cloud is, by definition, accessed through the internet, it is also shockingly easy to accidentally expose data to everyone on the internet.

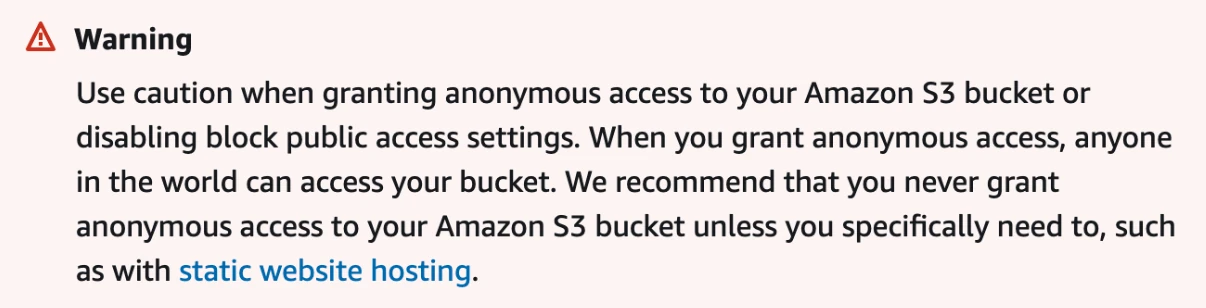

For example, improperly configured S3 buckets have been responsible for some of the most well-known cloud data breaches, including Booz Allen Hamilton, Accenture, and Prestige Software. This just isn’t a concern for on-prem organizations. There’s also the matter of the quantity of data being created in the cloud. Because the cloud is provisioned on demand, developers and engineers can easily duplicate databases and applications, and accidentally expose the duplicates to the internet.

Amazon’s warning against leaving buckets exposed to the internet
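To make the misconfiguration risk concrete, here is a minimal sketch of a check for buckets that look publicly readable. It works on configuration you have already fetched (for instance with boto3's `get_public_access_block` and `get_bucket_policy`) and makes no AWS calls itself. The function names are hypothetical, and the check is a heuristic: it ignores ACLs and does not evaluate policy conditions, so treat a result as a prompt for review rather than a verdict.

```python
import json

# The four S3 Block Public Access settings.
BLOCK_FLAGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def _as_list(value):
    """Policy fields may hold a single item or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def statement_allows_everyone(statement):
    """True if a statement allows any principal with no conditions attached."""
    if statement.get("Effect") != "Allow":
        return False
    if statement.get("Condition"):
        # Conditions may narrow access; this sketch does not evaluate them.
        return False
    principal = statement.get("Principal")
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return "*" in _as_list(principal.get("AWS", []))
    return False


def bucket_looks_public(public_access_block, policy_json):
    """Heuristic exposure check (hypothetical helper, not an AWS API).

    public_access_block: dict of the four flags, or None if not configured.
    policy_json: the bucket policy as a JSON string, or None if absent.
    """
    flags = public_access_block or {}
    if all(flags.get(name, False) for name in BLOCK_FLAGS):
        return False  # every public-access block is switched on
    if not policy_json:
        return False  # no policy to grant public access (ACLs not checked)
    policy = json.loads(policy_json)
    statements = _as_list(policy.get("Statement", []))
    return any(statement_allows_everyone(s) for s in statements)
```

Running a check like this across every bucket in an account, including the ones spun up for short-lived projects, is one way to catch the low-hanging fruit before an attacker does.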

Securing your cloud against data breaches is also complicated by the lack of a definable perimeter. When everything is accessible via the internet with the right credentials, guarding a ‘perimeter’ isn’t possible. Instead cloud security teams manage a range of security solutions designed to protect different elements of their cloud - the applications, the networking, the data, etc. And they have to do all of this without slowing down business processes. The whole advantage of moving to the cloud is speed and scalability. If security prevents scalability, the benefits of the cloud vanish.

So we see that with the cloud, there’s a basic level of security features you need to enable. The good news is that once those features are enabled, the cloud is much harder for an attacker to navigate. There’s monitoring built in, which makes breaches more difficult. It’s also a lot more difficult to understand a cloud architecture than an on-premise one, which means that attackers either have to be more sophisticated or they just go for the low-hanging fruit (exposed S3 buckets being a good example of this).

However, once you have your monitoring built in, there’s still one challenge facing cloud-first organizations: the data. No matter how many cloud security experts you have, data is constantly being created in the cloud that security may not even know exists. There’s no issue of visibility on premises. We know where the data is: it’s on the server we’re managing. In the cloud, there’s nothing stopping developers from duplicating data, moving it between environments, and forgetting about it completely (the result is known as shadow data). Even if you are able to discover the data, it’s no longer clear where it came from or what security posture it’s supposed to have. Data sprawl leads to a loss of visibility and context, which damages your security posture, and it is the primary cloud security challenge.
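As a rough illustration of what hunting for shadow data can look like, the sketch below groups an inventory of data stores by a content fingerprint and flags any duplicate that has no recorded owner. Everything here is an assumption made for the example: the `DataStore` fields, the idea that a fingerprint (such as a hash of schema and sampled rows) is already available, and the rule that "duplicated and unowned" means shadow data.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DataStore:
    """One entry in a hypothetical data inventory."""
    name: str
    environment: str           # e.g. "prod" or "dev"
    content_fingerprint: str   # assumed hash of schema and sampled content
    owner: Optional[str] = None


def find_shadow_copies(stores):
    """Return stores that duplicate another store's content and have no owner."""
    by_fingerprint = defaultdict(list)
    for store in stores:
        by_fingerprint[store.content_fingerprint].append(store)

    shadow = []
    for group in by_fingerprint.values():
        if len(group) > 1:
            # Same content in several places: the unowned copies are suspects.
            shadow.extend(s for s in group if s.owner is None)
    return shadow


inventory = [
    DataStore("customers", "prod", "abc123", owner="data-platform"),
    DataStore("customers-copy", "dev", "abc123"),
    DataStore("logs", "prod", "def456"),
]
for store in find_shadow_copies(inventory):
    print(f"possible shadow data: {store.name} ({store.environment})")
```

The hard part in practice is building the inventory in the first place. Once it exists, tying each copy back to its source restores the context that sprawl destroys.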

So what’s the verdict on data breaches in the cloud vs data breaches on premises? Which is riskier or more likely?

Is the Cloud More Secure Than On Premise?

As we warned in the beginning, the answer is an unsatisfying “it depends”. If your organization properly manages the cloud, configures the basic security features, limits data sprawl, and has cloud experts managing your environment, the cloud can be a fortress. Ultimately though, this may not be a conversation most enterprises are having in the coming years. With the advantages of scalability and speed, many new enterprises are cloud-first, and the question won’t be ‘is the cloud secure?’ but ‘is our cloud’s data secure?’
